Limited data is a common problem when training CNNs in industrial imaging applications. Petra Thanner and Daniel Soukup, from the Austrian Institute of Technology, discuss ways of working with CNNs when data is scarce

Deep learning – or neural networks – has had a triumphal march over the last decade. Since sufficient computing power has become available with GPUs, neural networks have been trained to handle many tasks in various areas, from language processing in text and sound, to image processing, classification, and anomaly detection. Numerous publications are reporting successful adoption of deep learning for various datasets and tasks. Today’s widespread opinion about deep learning is that the only necessary ingredients are a data sample and a task. After that, the neural network is able to identify the relevant patterns in the data all by itself and give highly sophisticated and reliable predictions on complex data problems. Unfortunately, this is not always correct.

Industrial inspection

In industrial inspection, image processing has always been a core discipline. To solve inspection problems during the pre-deep learning era, machine vision engineers often analysed only a handful of image samples and developed elaborate mathematical models that approximately described the visible, relevant geometrical or statistical structures. This was a painstaking procedure and had to be repeated each time new insights emerged. Today’s convolutional neural networks (CNN) promise to make this process much easier and, above all, more cost-effective, since all relevant information is automatically extracted from sample images. And the CNN training procedure can easily be re-run, whenever new and extended training data are available. The only necessary component is computing power.

However, there are also pitfalls with drawing all information only from training samples. Characteristic appearance variations not covered in the training data will probably not be recognised correctly in the CNN’s inference phase. Therefore, training data for neural networks must contain samples covering as many variations in appearance as possible.

While CNNs are indeed remarkably capable of generalising the presented training samples to a certain extent, they also might over-fit on scarce training data, and so jeopardise their prediction performance on unseen data. In addition, object classes that are missing entirely cannot usually be handled properly. Only in special settings, or when special precautions have been taken, will CNNs exhibit some awareness about novelty in the data. This means being able to identify anomalous data with regards to what has been presented during the training phase.

The academic community has compiled multiple thorough and balanced sample datasets, based on which the potential of new deep learning algorithms are explored, tested and published. For industrial applications, large databases – of images of production defects, for example – are not often readily available. Unbalanced and insufficient data contain a bias, so that the CNN over-fits on certain aspects of a task that are covered by the training data, but is blind to aspects that were inadvertently overlooked. Thanks to the amount and complexity of large data, one should always be aware of the potential for data bias. Therefore, training data should be diligently maintained, extended, as well as repeatedly re-training and evaluating the CNNs.

While neural networks require exhaustive data for training, lack of data is an inherent problem for real-world problems. In 2014, a new method for artificial data generation with CNNs was published: generative adversarial networks (GANs). Two CNNs are trained to work against each other, a counterfeiter network and a discriminator network. The counterfeiter network is trained to become better at generating random images, plausibly mimicking the available real training data but providing some new structures. The discriminator is trained for revealing whether images are artificially generated or real data. As the discriminator improves on revealing counterfeits, the generator network improves on generating plausible looking images until the discriminator can’t distinguish between the counterfeit images and the real images.

The emergence of GANs raised hopes that scarce data samples could simply be augmented with enough variation to make up for the lack of real data, for example when training CNNs for inspection tasks. Indeed, in some settings, such as entertainment or fashion applications, remarkable results can be achieved where augmented images are indistinguishable from real images – even humans can’t tell the difference. This work uses relatively large real training databases containing enough structural variation for the GAN to learn. In fact, a GAN cannot generate substantially new artificial data without a clue from real data. For industrial inspection where precision and reliability count, the artificially generated data should be really in line with real image distributions, not only those that look plausible. Nevertheless, even measuring and proving generated data matches real data cannot reliably be accomplished.

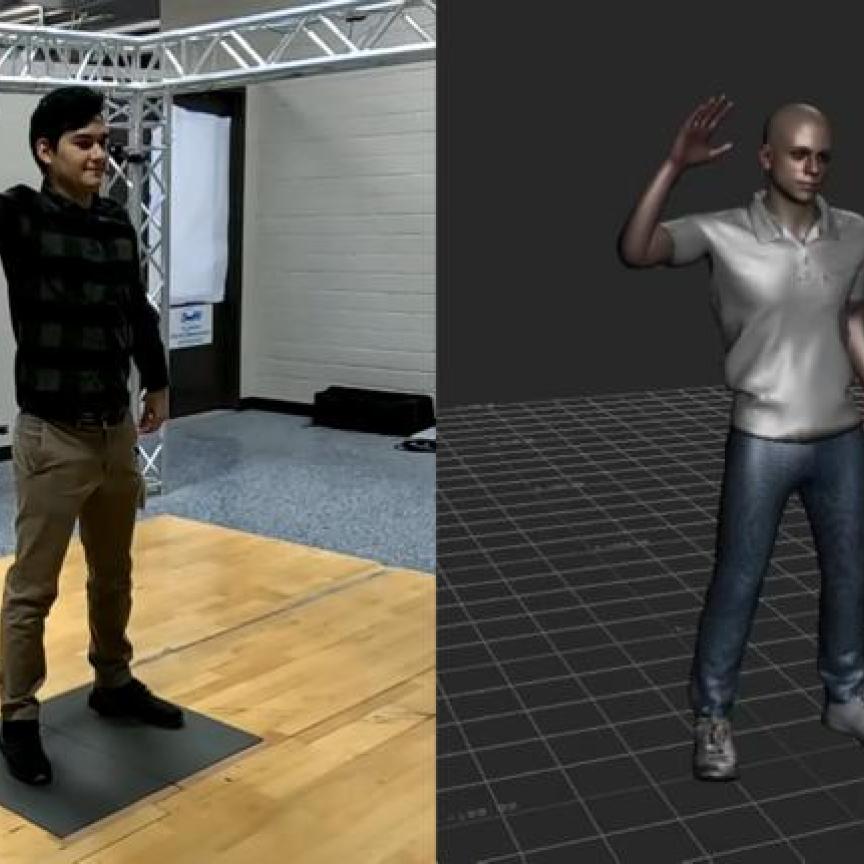

Image: Deep learning neural network undertaking a partial conventional algorithm’s work in only a small part of a 3D image processing pipeline. Poor image quality in the left image is improved with a conventional de-noise algorithm (middle image), but its computational performance is slow and not constant. The neural network (right image) learns to de-noise from the conventional algorithm, and is constant and fast. It can be accelerated thanks to the parallelisation capabilities of neural networks.

For some applications, one way to use a GAN is to make it simpler for the GAN by combining it with conventional artificial data generation methods such as rendering. This generates a large amount of geometrical variation but usually fails to imitate the characteristics of the real image acquisition systems correctly. GANs can be used for doing the so-called style transfer, learning and applying the statistical camera properties to the rendered images without disturbing their geometrical content.

Image statistics are another potential pitfall when working with neural networks. It has been shown in various publications that adversarial noise patterns exist that deteriorate the predictions of CNNs. An image showing a truck is correctly classified as a truck by a CNN, but by adding an adversarial noise pattern to that image the CNN fails, although the image content has not changed visibly from a human perspective. That disturbing vulnerability to adversarial noise has been shown to be inherent to neural networks. Therefore, one must take care of properly setting up the acquisition system and image pre-processing pipelines, to thoroughly ensure that only properly processed data are used.

As deep learning is a fully data-driven method, one is totally dependent on the data quality. In an industrial environment, one might not be willing to entirely rely on the quality of a training dataset. We ask users to integrate deep learning cautiously into diligent data acquisition and image-processing pipelines, potentially using neural networks only in sub-parts, keeping conventional image processing methods whenever they work satisfactorily. For example, modern photometric and multi-view acquisitions, together with computational imaging, might be a valuably enriched input for a CNN to infer with. In that manner, one can exploit the controllability of data quality and information content with image processing, together with the ability to reveal complex, high-dimensional interdependencies, which no other method can grasp like deep learning.

The effort of developing and maintaining a reliable inspection system has not diminished. However, the bulk of the work is now managing and acquiring data instead of designing models: the better the training data, the better the predicted results. With a good representative training database – optimally real, not artificially generated data – deep learning methods are capable of surpassing conventional image processing-based systems by far. Reliably integrating deep learning into an actual industrial visual inspection process requires a lot of experience in deep learning, plus image acquisition and processing.

The high-performance imaging processing group at Austrian Institute of Technology works on industrial inspection aspects of deep learning, with methods such as one-class learning and data augmentation to solve challenges with training neural networks when data is limited. Petra Thanner is senior research engineer, Daniel Soukup is a scientist in the group.

--

Commercial products

Deep learning is now part of several industrial imaging software packages. MVTec Software has enhanced its deep learning technologies in the latest version (v19.05) of Halcon, including deep learning inference that can be executed on CPUs with Arm processor architectures. This allows customers to use deep learning on standard embedded devices.

Deep learning-based object detection has also been improved on Halcon 19.05. The method, which locates and identifies objects by their surrounding rectangles, so-called bounding boxes, now detects the orientation of objects.

Matrox Imaging’s Design Assistant X, the latest edition of the company’s flowchart-based vision application software, includes image classification using deep learning. The classification tool makes use of a convolutional neural network to categorise images of highly textured, naturally varying, and acceptably deformed goods. All inference is performed on a mainstream CPU, eliminating the dependence on third-party neural network libraries and the need for specialised GPU hardware.

Adaptive Vision has released version 4.12 of its software products: Studio, Library and Deep Learning add-on. According to Adaptive Vision’s CEO Michał Czardybon, the most intensive development the company is working on is in the area of deep learning, with a notable change being a three times increase of inference speed in the software’s feature detection tool. There is also the possibility of working with more flexible regions-of-interest in the object classification tool; support for Nvidia RTX cards; and a new method of anomaly detection.

Adaptive Vision is also developing traditional tools and the rapid development environment itself. It has redesigned its minimal program view, where instead of a ‘blocks and connections’ model, the user gets a simplified view with tools connected using named data sources.

Korean firm, Sualab, has upgraded its deep learning software, SuaKit, to version 2.1. SuaKit is a deep learning machine vision library specialising in manufacturing industries.

Four functions have been upgraded or added in SuaKit v2.1: continual learning, using the same model to train for a new object; uncertainty data value provision, which shows the difference between normal and defect images; multi-image analysis, for inspecting various images in a bundle to increase the speed; and one-class learning, for when there is limited images of defects.

Open eVision, from Euresys, now includes a convolutional neural network-based image classification library called EasyDeepLearning. The library has a simple API and the user can benefit from deep learning, with only a few lines of code.

EVT’s EyeVision software now has a deep learning surface inspector, for detecting flaws, damage and impurities on complex functional and aesthetic technical surfaces. The self-learning algorithm works directly on live images. Local training of an undefined number of classifications is used for evaluation of the image.

Processing in 3D

Along with improvements in deep learning algorithms, the new Halcon release from MVTec offers several enhancements for surface-based 3D matching. This means that additional parameters can be used to better inspect the quality of 3D edges, resulting in more robust matching – especially in the case of noisy 3D data.

Credit: Photoneo

Matrox Design Assistant X now also includes a photometric stereo tool, which creates a composite image from a series of images taken with light coming in from different directions.

Meanwhile, Photoneo has released its Bin Picking Studio 1.2.0, which can configure multiple vision systems and up to four picking objects in a single solution. The software also integrates a localisation configurator for configuring and verifying Photoneo’s localisation parameters. There is a new 3D visualisation tool on the deployment page. Besides the robot with its environment and point cloud representing the scene, the localised parts are present, including a colour code and related statutes.

Finally, Opto Engineering has released Fabimage Studio, a software tool to assist machine vision engineers when creating an application. It follows a natural logic flow – from input to output – combined with a powerful library. Opto Engineering supports engineers in low-level programming with general purpose libraries (FabImage Library Suite) and specific, application-related libraries: TCLIB Suite, dedicated to setup and optimisation of telecentric systems (alignment of lens and light, alignment of object plane, focusing and distortion removal), and 360LIB Suite, developed to suit all needs of 360° lenses for single-camera inspection of cavities and lateral surfaces.