A standard video camera and a heat-sensitive camera team up with artificial intelligence to replace identifying features with stick figures in real time, so that technologies driven by camera-based sensors would hold no personally identifying imagery.

As consumers, we have a love/hate relationship with smart technology. While we enjoy the benefits of devices equipped with image sensors, such as home security systems and robot vacuum cleaners, we shudder at the thought of being constantly recorded, with the footage kept on file forever.

For the most part, we manage to forget that the camera, and the digital records it keeps and uploads to the cloud, are even there, which lets us live comfortably. But with technology becoming smaller and cheaper, camera-equipped devices are more prevalent than ever before, and so is the threat of exposure.

Privacy leaks of sensitive personal footage

“Most consumers do not think about what happens to the data collected by their favourite smart home devices,” said Alanson Sample, an associate professor of computer science and author of a study on PrivacyLens, a device designed to address the problem. “In most cases, raw audio, images and videos are being streamed off these devices to the manufacturers’ cloud-based servers, regardless of whether or not the data is actually needed for the end application.”

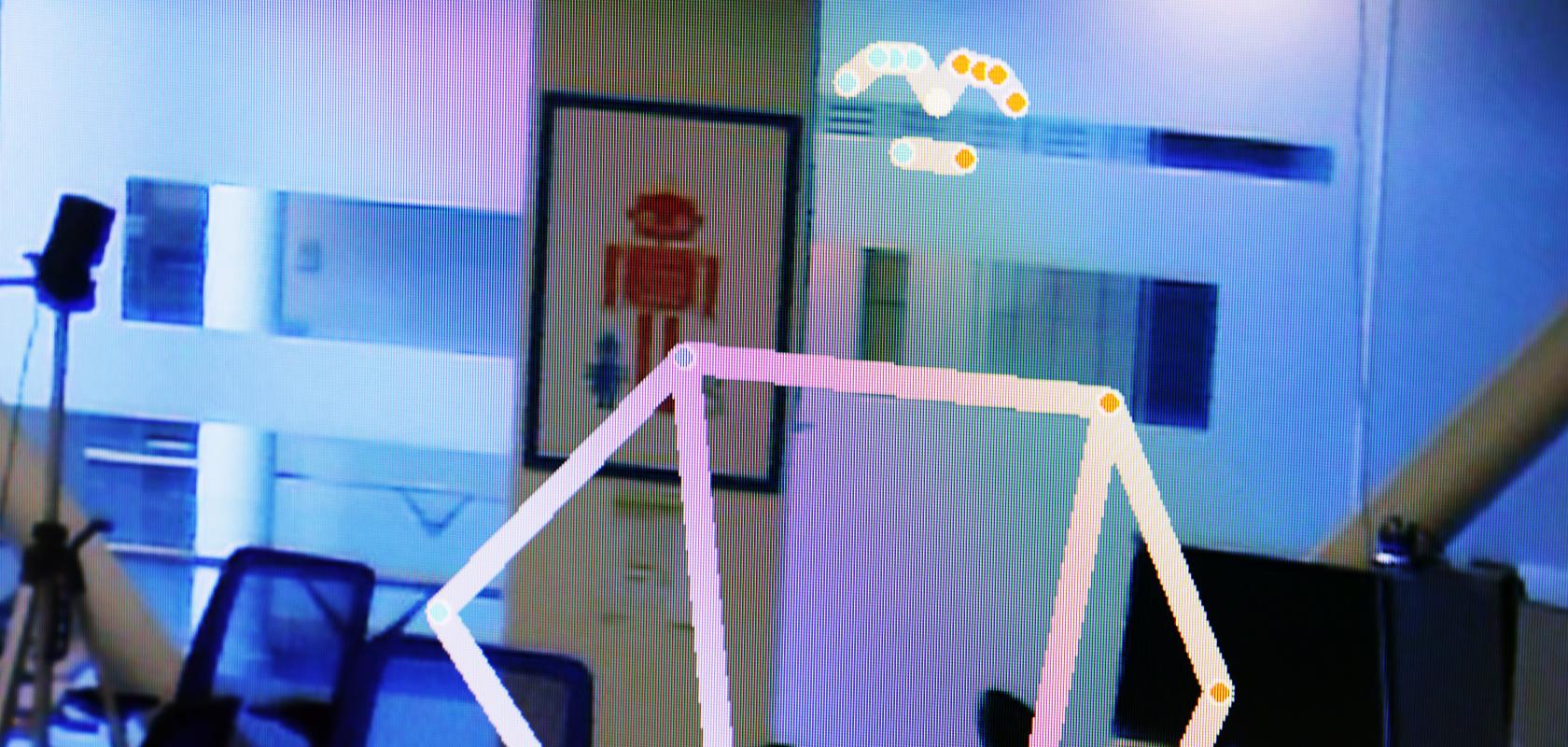

To address this little-discussed but often-feared issue, engineers at the University of Michigan developed PrivacyLens, which combines a standard video camera with a heat-sensitive camera that identifies a person’s location from their body temperature. Using that location data, the device replaces the subject’s likeness with a generic stick figure, which continues to mimic the subject’s movements in real time.

Because the transformation is made in-camera, before anything is uploaded to the cloud, a smart device can still detect that a person is present (now shown as a stick figure) and continue to operate normally, but crucially without revealing the subject’s identity. The raw photos are not stored anywhere on the device or in the cloud, which eliminates access to unprocessed images and is intended to make users more comfortable around camera-dependent smart devices.
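The core idea, using heat data to find a person and scrubbing those pixels before the image leaves the camera, can be illustrated with a minimal Python sketch. This is not the PrivacyLens implementation: the temperature band, the function names and the assumption that the thermal and video frames are already aligned at the same resolution are all illustrative, and the sketch simply blacks out the person rather than drawing a stick figure, which would require a pose-estimation step.

```python
import numpy as np

# Assumed skin-surface temperature band in degrees Celsius (illustrative values only).
BODY_TEMP_MIN_C = 30.0
BODY_TEMP_MAX_C = 38.0


def person_mask(thermal_c: np.ndarray) -> np.ndarray:
    """Return a boolean mask of pixels whose temperature falls in the assumed body band."""
    return (thermal_c >= BODY_TEMP_MIN_C) & (thermal_c <= BODY_TEMP_MAX_C)


def remove_person(rgb: np.ndarray, thermal_c: np.ndarray) -> np.ndarray:
    """Black out every RGB pixel that the thermal frame marks as a person.

    Assumes both frames are already aligned and share the same height and width.
    """
    if rgb.shape[:2] != thermal_c.shape:
        raise ValueError("rgb and thermal frames must share height and width")
    sanitized = rgb.copy()  # leave the caller's frame untouched
    sanitized[person_mask(thermal_c)] = 0
    return sanitized


# Tiny synthetic example: a 4x4 scene at 21 C containing a 2x2 warm region.
thermal = np.full((4, 4), 21.0)
thermal[1:3, 1:3] = 34.0
rgb = np.full((4, 4, 3), 200, dtype=np.uint8)
sanitized = remove_person(rgb, thermal)
```

In this toy example only the four warm pixels are blacked out; in a real device, the sanitized frame would be the only image passed on for storage or upload.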

Safer private health monitoring

“Cameras provide rich information to monitor health,” said Yasha Iravantchi, who presented PrivacyLens at the Privacy Enhancing Technologies Symposium in Bristol, UK. They “help track exercise habits and other activities, or call for help when an elderly person falls. But [smart camera technology] presents an ethical dilemma. Without privacy mitigations, we present a situation where they must weigh giving up their privacy in exchange for good chronic care. This device could allow us to get valuable medical data while preserving patient privacy.”

Privacy outside the home

With an included privacy scale, users are able to control how much of their image is captured, and in which locations and situations. “People might feel [more] comfortable only blurring their face when in the kitchen,” said Sample, “but in other parts of the home may want their whole body removed.”
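A per-room privacy setting of this kind could be represented as a simple lookup, as in the hypothetical Python sketch below. The level names, their ordering, the room labels and the choice of the strictest level as the default are assumptions made for illustration, not details of the actual device.

```python
from enum import Enum


class PrivacyLevel(Enum):
    """Hypothetical privacy levels, ordered from least to most restrictive."""
    BLUR_FACE = 1
    STICK_FIGURE = 2
    REMOVE_BODY = 3


# Hypothetical user preferences keyed by room name.
ROOM_POLICY = {
    "kitchen": PrivacyLevel.BLUR_FACE,
    "living_room": PrivacyLevel.STICK_FIGURE,
}

# Rooms without an explicit setting fall back to the strictest level.
DEFAULT_LEVEL = PrivacyLevel.REMOVE_BODY


def level_for(room: str) -> PrivacyLevel:
    """Look up the privacy level for a room, using the strict default if unset."""
    return ROOM_POLICY.get(room, DEFAULT_LEVEL)
```

Falling back to the most restrictive level means that a room the user has not configured reveals as little as possible.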

As well as helping users feel safe and retain their privacy inside their own homes, the device can also be used in combination with smart cameras outside the home. PrivacyLens could potentially be used to stop autonomous vehicles from becoming surveillance drones, and to make it easier for those collecting environmental data to comply with privacy laws.