MIT researchers have developed a new approach that enables robots to focus on the most relevant objects in cluttered environments. The research could improve machine-vision applications across industry.

One of the most critical challenges in the evolving field of machine vision and automation is a robot’s ability to identify and prioritise objects in complex environments, such as messy scenes containing a variety of object types, shapes and colours. As industries such as manufacturing, logistics and healthcare increasingly rely on automated systems, the need for more efficient robots is becoming more urgent.

To address the issue, MIT researchers have introduced a method that enables robots to filter cluttered scenes and quickly focus on the objects most relevant to a specific search, potentially revolutionising the way they operate.

Traditional object-recognition algorithms tend to analyse everything in their field of view indiscriminately, even in visually busy or crowded environments, without first determining each object’s relevance. By focusing only on the objects most relevant to a search, MIT’s new technique, named Clio, spends less processing on irrelevant data.
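To make the contrast concrete, here is a minimal Python sketch of relevance filtering. It assumes, hypothetically, that an open-set vision-language model has already turned each image segment and the task description into embedding vectors in a shared space, and it keeps only segments whose cosine similarity to the task clears a threshold. The helper `relevant_segments`, the segment names, the three-dimensional vectors and the 0.5 threshold are all invented for illustration; real models produce vectors with hundreds of dimensions, and this is not Clio’s implementation.

```python
import math


def cosine(a, b):
    """Cosine similarity between two vectors; returns 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def relevant_segments(segments, task_embedding, threshold=0.5):
    """Hypothetical helper: keep only segments similar enough to the task.

    segments: dict of segment name -> embedding vector
    Returns the names of segments scoring at or above `threshold`,
    most relevant first. Segments below the threshold are dropped.
    """
    scored = [(name, cosine(emb, task_embedding)) for name, emb in segments.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return [name for name, score in scored if score >= threshold]


# Toy 3-D embeddings standing in for a vision-language model's output.
segments = {
    "backpack": [0.9, 0.1, 0.0],
    "chair": [0.1, 0.9, 0.1],
    "clothes_pile": [0.2, 0.3, 0.9],
}
task = [1.0, 0.0, 0.2]  # stand-in embedding for "pick up orange backpack"
print(relevant_segments(segments, task))  # ['backpack']
```

Because the chair and the pile of clothes score below the threshold, any later, more expensive processing step would only ever see the backpack.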

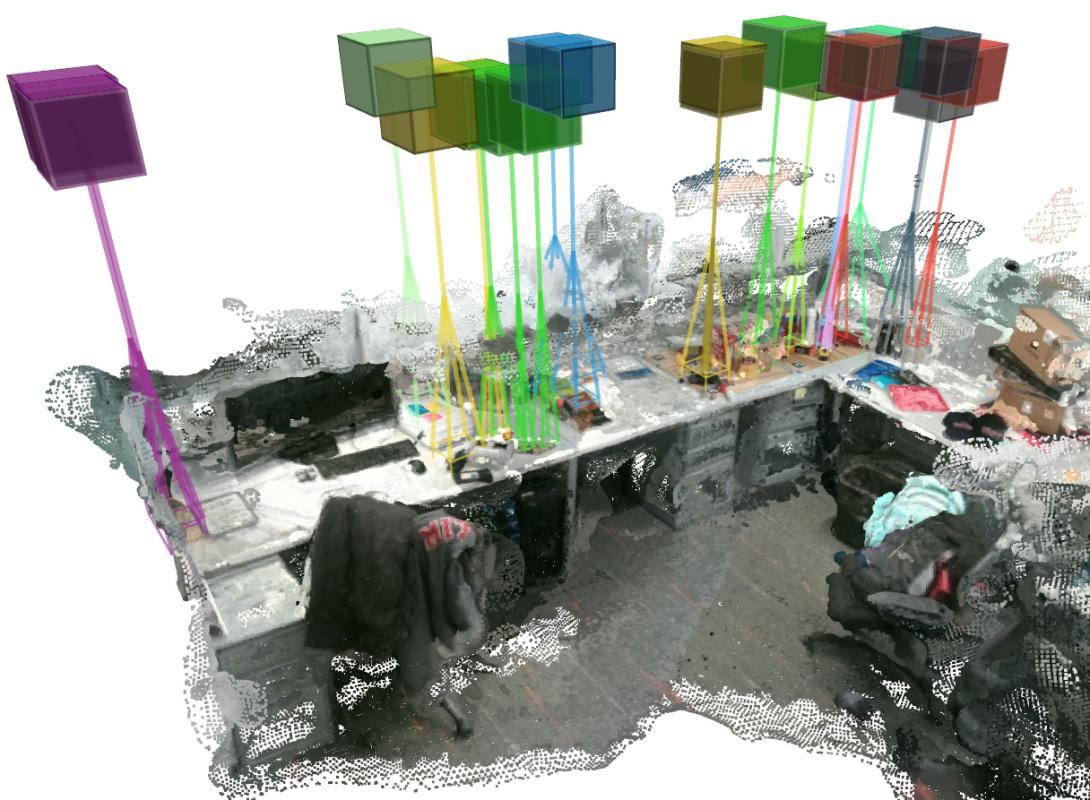

A cluttered office cubicle is quickly segmented by Clio. The coloured cubes mark regions Clio has identified as containing task-relevant objects; the rest of the scene is ignored. Image: Courtesy of the researchers

Information bottleneck in open-set recognition

Advances in computer vision and language processing have allowed robots to begin to identify individual objects. But this was only possible in carefully curated, controlled environments, using objects drawn from a small set the robot had been pretrained to recognise. The field of open-set recognition has since allowed researchers to use deep-learning tools to train neural networks on billions of openly available image-text pairs from the internet.

The remaining challenge is for robots to first categorise objects during their initial search, then discount the irrelevant ones. “Typical methods pick some arbitrary fixed level of granularity to fuse segments of a scene into what it considers as one object,” says Dominic Maggio, co-author of the research. But “if that granularity is fixed without considering the tasks, the robot may end up with a map that isn’t useful.”
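The granularity problem Maggio describes can be illustrated with a toy Python sketch loosely modelled on agglomerative Information Bottleneck clustering: adjacent segments are fused whenever doing so loses little information about which task they serve. This is not Clio’s code. The segment names, the adjacency graph, the per-segment task distributions, the unit segment weights and the `max_cost` cut-off are all hypothetical, and the merge cost used here (combined weight times weighted Jensen-Shannon divergence) is the textbook agglomerative IB cost rather than anything taken from the research.

```python
import math


def kl(p, q):
    """Kullback-Leibler divergence KL(p || q) in nats; terms with p_i = 0 contribute 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def weighted_js(p, q, wp, wq):
    """Jensen-Shannon divergence between p and q, weighted by segment sizes wp and wq."""
    total = wp + wq
    m = [(wp * pi + wq * qi) / total for pi, qi in zip(p, q)]
    return (wp / total) * kl(p, m) + (wq / total) * kl(q, m)


def merge_segments(clusters, edges, max_cost):
    """Greedily fuse adjacent segments whose task distributions are similar.

    clusters: hypothetical dict id -> (weight, distribution over tasks)
    edges:    set of frozenset({id_a, id_b}) for spatially adjacent segments
    Returns the groups of original segment ids that ended up fused together.
    """
    clusters = dict(clusters)
    edges = set(edges)
    members = {cid: {cid} for cid in clusters}

    def cost(edge):
        # Information about the tasks lost if the two segments become one object.
        a, b = tuple(edge)
        (wa, pa), (wb, pb) = clusters[a], clusters[b]
        return (wa + wb) * weighted_js(pa, pb, wa, wb)

    while edges:
        best = min(edges, key=cost)
        if cost(best) > max_cost:
            break  # every remaining merge would blur task-relevant detail
        a, b = sorted(best)
        wa, pa = clusters[a]
        wb, pb = clusters.pop(b)
        w = wa + wb
        clusters[a] = (w, [(wa * x + wb * y) / w for x, y in zip(pa, pb)])
        members[a] |= members.pop(b)
        # Re-point b's neighbours to a; the a-b edge collapses and is dropped.
        remapped = (frozenset(a if n == b else n for n in e) for e in edges)
        edges = {e for e in remapped if len(e) == 2}

    return sorted(sorted(group) for group in members.values())


# Distributions over two hypothetical tasks: [pick up backpack, move clothes].
segments = {
    "bag_body": (1.0, [0.9, 0.1]),
    "bag_strap": (1.0, [0.85, 0.15]),
    "shirt": (1.0, [0.1, 0.9]),
    "trousers": (1.0, [0.15, 0.85]),
}
adjacency = {
    frozenset({"bag_body", "bag_strap"}),
    frozenset({"bag_strap", "shirt"}),
    frozenset({"shirt", "trousers"}),
}
print(merge_segments(segments, adjacency, max_cost=0.1))
# [['bag_body', 'bag_strap'], ['shirt', 'trousers']]

# A single generic task gives identical distributions, so everything fuses.
one_task = {name: (1.0, [1.0]) for name in segments}
print(merge_segments(one_task, adjacency, max_cost=0.1))
# [['bag_body', 'bag_strap', 'shirt', 'trousers']]
```

With the two tasks, the strap and bag body fuse into one "backpack" object and the shirt and trousers into one "clothes" object, but the two groups stay apart. With a single task, every merge costs nothing and the scene collapses into one object. The same segments yield different granularity depending on the tasks, which is the point of the quote above.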

Clio maps the task-relevant objects in Spot’s surroundings in real time, allowing the quadruped robot to ‘pick up orange backpack’. Image: Courtesy of the researchers

How does Clio choose what to focus on?

Clio is named after the Greek muse of history, a nod to its ability to remember only the elements that matter for a given task. It determines the level of granularity required to interpret its surroundings and retains only the parts of a scene relevant to the task at hand, which makes it useful for many real-time decision-making applications.

“Search and rescue is the motivating application for this work,” says Luca Carlone, associate professor in MIT’s Department of Aeronautics and Astronautics, “but Clio can also power domestic robots and robots working on a factory floor alongside humans. It’s really about helping the robot understand the environment and what it has to remember in order to carry out its mission.”

Testing Clio in natural environments

“What we thought would be a really no-nonsense experiment would be to run Clio in my apartment, where I didn’t do any cleaning beforehand,” says Maggio. Given images of the cluttered apartment and a list of natural-language tasks such as ‘move pile of clothes’, Clio was able to quickly segment the scenes, feed them through an algorithm and identify the segments containing piles of clothes.

In another experiment, the team ran Clio on Boston Dynamics’ quadruped robot, Spot. As Spot mapped an office building’s interior, Clio picked out segments of the mapped scenes that were visually related to the given task, then generated an overlay map showing only the target objects.

In the future, the team plans to adapt Clio to handle higher-level tasks. As Maggio explains, “For search and rescue, you need to give it more high-level tasks like ‘find survivors,’ or ‘get power back on’. So we want to get a more human-level understanding of how to accomplish more complex tasks.”