The story of 3D vision began with simple observation and has led to some very complex technology. Starting with early scholars such as Euclid and Wheatstone, who explored human depth perception, the field has evolved to the point where 3D vision is now everywhere, from robotics and automotive to medicine and much more. And it is here to stay.

Ed Goffin, Vice President of Product Marketing at Pleora Technologies, explains: “When you look at market data the growth of 3D imaging over recent years is very noticeable. As logistics, robotics, manufacturing and other markets adopt 3D it’s moving quite quickly from a niche to a commodity. That presents a ‘good news, bad news’ scenario for designers. As the market grows, so does price pressure.”

3D vision: The technological challenges

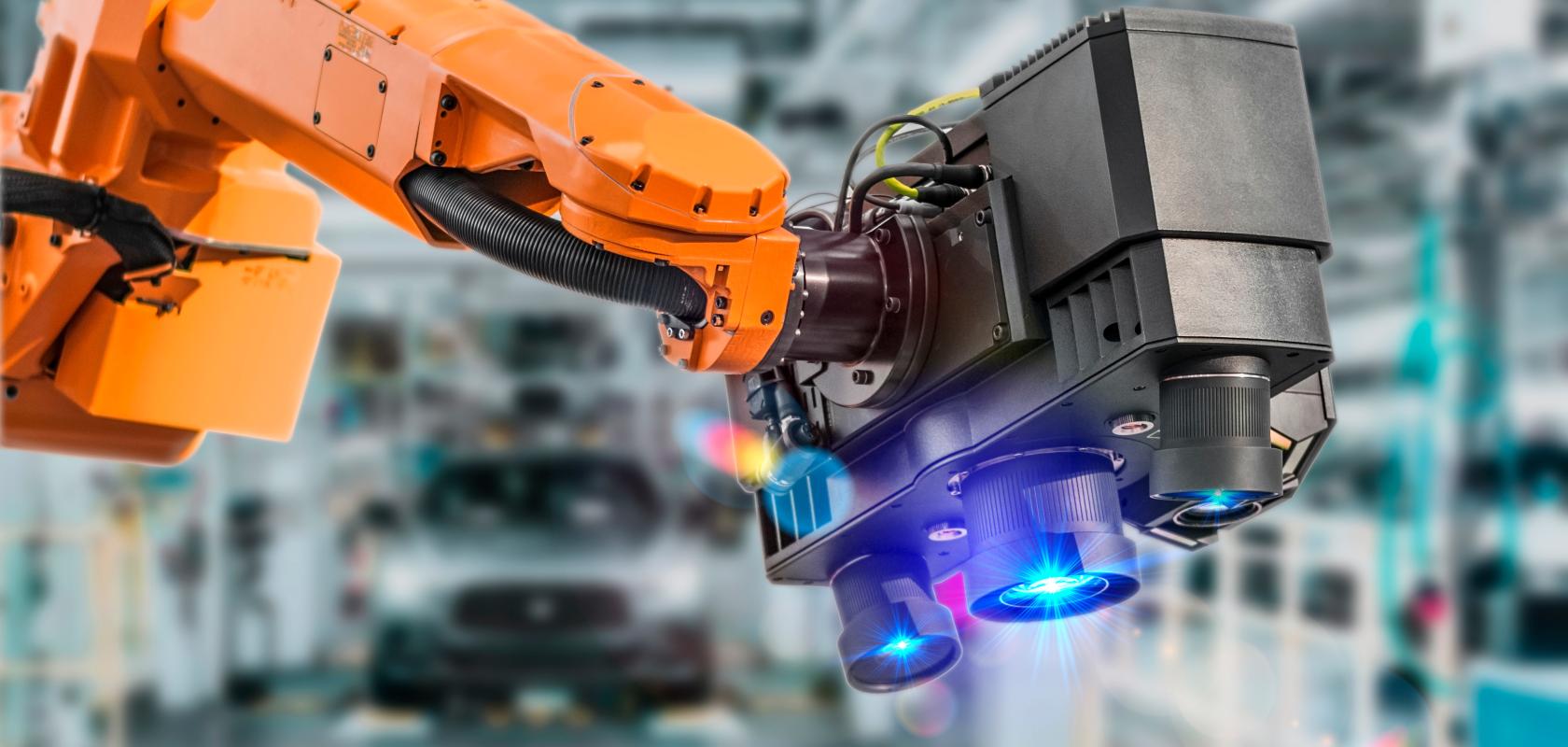

The journey of 3D vision is essentially about solving complex technological challenges. At its core, the technology aims to capture depth information alongside visual data to provide more detail than traditional imaging. For example, 3D imaging allows an autonomous mobile robot (AMR) to navigate an ever-changing manufacturing floor or a farmer’s field. In the push towards automation, 3D is a key technology for robotic bin-picking, where three-dimensional vision is crucial to make specific “picks” from a mixture of goods.

3D is a key technology for robotic bin-picking, where three-dimensional vision is crucial to make specific “picks” from a mixture of goods (Credit: Pleora Technologies)

As the market for 3D imaging grows, there are a few critical challenges for designers to consider, including system integration and footprint. This is where companies like Pleora play a key role, providing device designers and integrators with solutions that enable innovation.

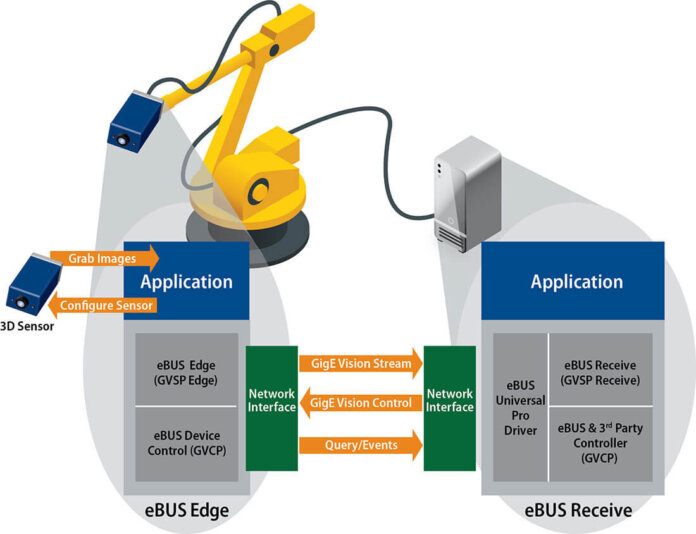

The company’s eBUS Edge software is a prime example of this approach. A comprehensive transmit solution, it provides designers with a software-only approach to integrate GigE Vision connectivity into imaging devices including 3D, smart, and embedded solutions. On the receiving side, the company’s eBUS SDK offers a novel approach that significantly reduces CPU requirements to ensure high-reliability, low-latency performance. Goffin explains: “eBUS Edge converts any imaging device into a GigE Vision, GenICam compliant solution without requiring any additional hardware. It’s a software-only approach that allows 3D imaging devices to integrate with any standards-compliant image processing solution.” Critically, says Goffin, “designers can upgrade existing 3D devices, or develop new smaller footprint solutions that interoperate seamlessly with machine vision processing.”

In 3D applications one key challenge is integrating custom imaging devices with machine vision processing. Software solutions, including Pleora’s eBUS Edge, convert 3D cameras into GigE Vision standard compliant devices that work with off-the-shelf processing tools (Credit: Pleora Technologies)

Overcoming barriers to adoption with standardisation

In many ways, today’s evolution of 3D imaging parallels the growth of broader machine vision technology over the previous two decades, where standardisation played an important role in driving wider-scale adoption across multiple end-markets.

“Until recently 3D often relied on proprietary transport mechanisms,” explains James Falconer, Product Manager with Pleora. “These were often based on Ethernet, but there was no defined approach to sending 3D data, resulting in a number of closed systems. We’re seeing a push both from imaging device manufacturers and system integrators to move to a standards-based technology with GigE Vision as the underlying transport layer.”

Compounding the problem, transmitting the imaging information alongside the required data for 3D is not trivial. Falconer explains: “With multi-part payload transmission for 3D, different types of data need to be transmitted in a single container so it can be processed by a single receiver. For example, in 3D you need to bring together images from a left camera, a right camera, a 3D depth map, a confidence map, along with chunk data that includes additional sensor information.”
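The idea of a single container can be illustrated with a minimal Python sketch. It is only a conceptual model, not the GigE Vision wire format or the eBUS API: the class names, part labels, pixel sizes, and the `exposure_us` chunk field are all hypothetical and chosen for illustration. It shows the parts Falconer lists being grouped under one frame so that a single receiver can pull out whichever part it needs.

```python
from dataclasses import dataclass, field
from enum import Enum


class PartType(Enum):
    # Hypothetical labels for illustration; not identifiers from the standard.
    LEFT_IMAGE = "left_image"
    RIGHT_IMAGE = "right_image"
    DEPTH_MAP = "depth_map"
    CONFIDENCE_MAP = "confidence_map"


@dataclass
class Part:
    part_type: PartType
    width: int
    height: int
    bytes_per_pixel: int
    data: bytes

    def __post_init__(self):
        # Reject buffers whose size does not match the declared geometry.
        expected = self.width * self.height * self.bytes_per_pixel
        if len(self.data) != expected:
            raise ValueError(
                f"{self.part_type.value}: expected {expected} bytes, "
                f"got {len(self.data)}"
            )


@dataclass
class MultiPartFrame:
    frame_id: int
    parts: list = field(default_factory=list)
    chunk_data: dict = field(default_factory=dict)  # extra sensor information

    def add_part(self, part):
        # In this sketch each part type may appear once per frame.
        if any(p.part_type is part.part_type for p in self.parts):
            raise ValueError(f"duplicate part: {part.part_type.value}")
        self.parts.append(part)

    def get(self, part_type):
        for p in self.parts:
            if p.part_type is part_type:
                return p
        raise KeyError(part_type)

    def payload_size(self):
        # Total bytes of image-like data carried by this frame.
        return sum(len(p.data) for p in self.parts)


def build_example_frame(frame_id=1, width=4, height=2):
    # Zero-filled buffers stand in for real sensor output.
    n = width * height
    frame = MultiPartFrame(frame_id=frame_id, chunk_data={"exposure_us": 5000})
    frame.add_part(Part(PartType.LEFT_IMAGE, width, height, 1, bytes(n)))
    frame.add_part(Part(PartType.RIGHT_IMAGE, width, height, 1, bytes(n)))
    frame.add_part(Part(PartType.DEPTH_MAP, width, height, 2, bytes(2 * n)))
    frame.add_part(Part(PartType.CONFIDENCE_MAP, width, height, 1, bytes(n)))
    return frame
```

A receiver working with such a frame would call, for example, `frame.get(PartType.DEPTH_MAP)` and read the chunk data from the same object, rather than correlating several separate streams.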

In response to the growing demand for 3D, the GigE Vision and GenICam standards address multi-part transfer to help with interoperability. “The benefit for the device designer or system integrator is interoperability,” explains Falconer. “If the end-customer wants to use 3D imaging solutions from one vendor and image processing software from another there’s a common approach to how imaging and data is transported.”

Standardisation has played a key role in encouraging new vendors to offer 3D solutions, which has resulted in a more competitive market that makes the technology a viable proposition for end-users. Advances in software processing, and more significantly plug-and-play interoperability and ease-of-use, are also making it more attractive for end-users to upgrade from 2D to 3D solutions.

Footprint and cost challenges for 3D imaging

Many of the fastest growing markets for 3D solutions add a further design challenge, where the size and weight demands of the end solution dictate smaller, networked devices. For logistics applications, automated guided vehicles (AGVs) that follow a fixed route, and autonomous mobile robots (AMRs) that navigate independently, demand smaller form factor imaging devices that are networked to make a local processing decision. These autonomous driving systems are deployed on manufacturing floors to move parts, and are also finding new applications in logistics, sorting, and agriculture.

“Reducing footprint is a key criteria for these types of systems, where designers simply do not have enough space to integrate interface hardware,” explains Falconer. “A software transmitter approach enables the design of compact imaging devices, while standardising on GigE Vision enables the networking of multiple sensors, and different types of sensors, with onboard machine vision processing. Beyond 3D we’re seeing eBUS Edge designed into smart cameras and embedded sensor applications for the same reasons, where designers need to address similar form factor and interoperability requirements.”

Designers and integrators can also find cost advantages as 3D reaches more attractive price points. End-users can replace multiple 2D cameras, which they have traditionally used to build a 3D vision system, with just one or two 3D cameras. This provides substantial installation and cost advantages. Furthermore, with advancements in software, ease of use, and increasingly plug-and-play functionality, more end-users can now consider upgrading from 2D to 3D vision solutions. There is also greater emphasis on providing complete 3D solutions that integrate with standard machine vision processing.

A cost-effective software solution to 3D vision

Pleora has worked extensively with an industrial imaging company that designs robotic 3D systems that inspect cars for cosmetic defects during manufacturing. The designer has unique technology that inspects reflective surfaces on the vehicle, such as metals, plastics, and ceramics, using 3D fringe projection.

In this application, a sequence of structured light patterns is projected onto the surface. Specialised area scan cameras use these patterns to search for corresponding points and generate a 3D point cloud that is transmitted for processing. eBUS Edge supports standardised multi-source data streaming to transmit multiple sources/stream channels simultaneously. Falconer explains: “One of their primary requirements is to convert the existing area scan sensor interface to GigE Vision so their end-customers can use off-the-shelf machine vision processing. Converting the device to GigE Vision using eBUS Edge meets standards requirements for interoperability, without adding additional hardware to a small footprint, mobile inspection system.”

A glimpse into the future

Goffin predicts continuing growth in new imaging applications will drive demand for software-based interface approaches: “I don’t envy imaging device designers. Just look at the key growth areas for the machine vision market, and the trend is definitely towards systems that incorporate a number of lower cost, smaller footprint, networked imaging devices making real-time decisions. Autonomous robotics and 3D imaging are just one example. Designers need to be able to address these performance demands, while also ensuring interoperability in increasingly complex systems.”

Find out more about the design and deployment advantages for 3D imaging devices in this new White Paper from Pleora Technologies. It explores the technological landscape of 3D machine vision, including the key challenges facing 3D imaging device design and cost. It highlights Pleora’s eBUS Edge software as a cost-effective, scalable, and standards-compliant solution to reduce design costs and ease integration for 3D imaging devices.