SynSense, a supplier of neuromorphic intelligence and application solutions, has launched the Speck Demo Kit, which allows users to deploy and validate event-based neuromorphic vision applications.

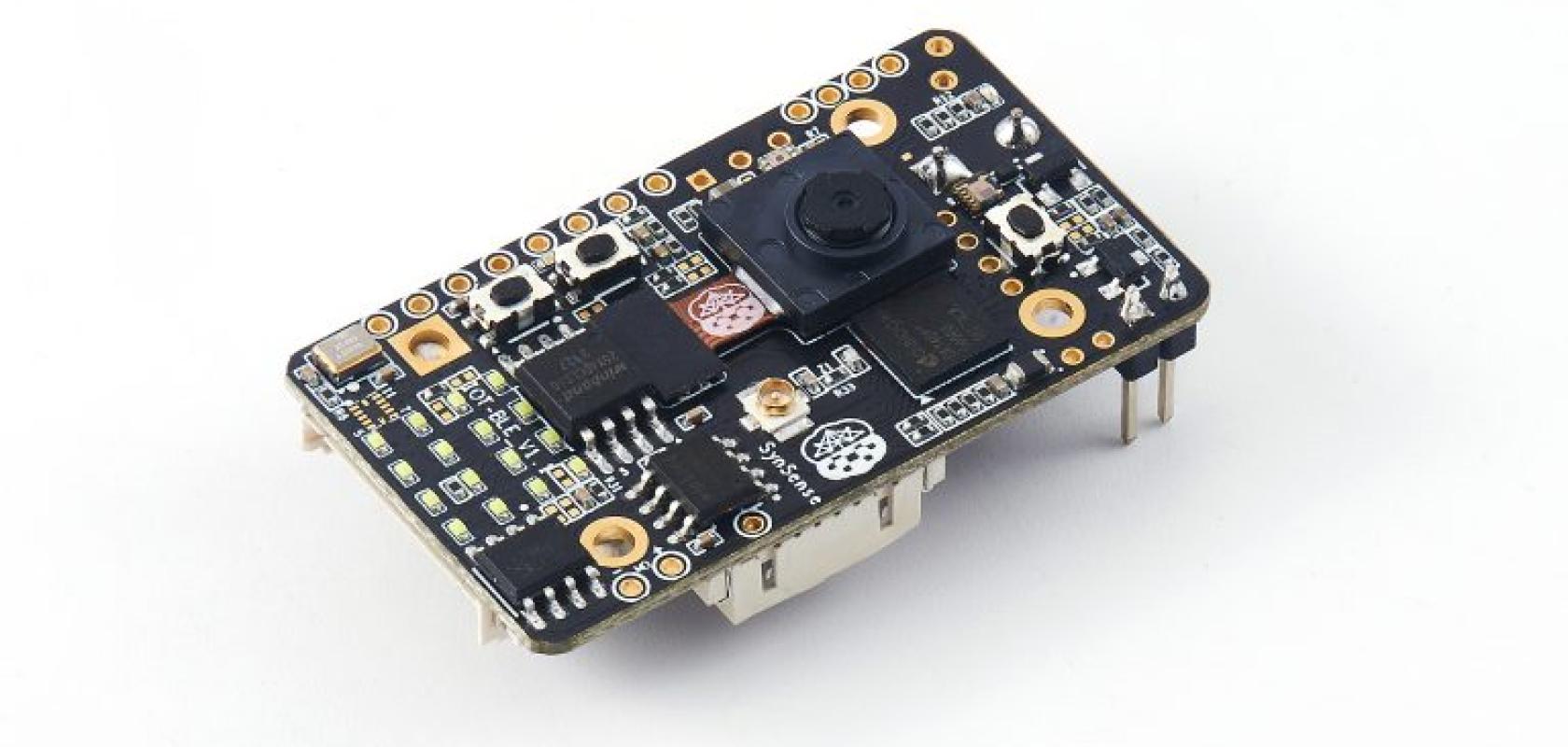

The kit combines SynSense’s ultra-low-power Speck dynamic vision module with an ultra-low-power Bluetooth controller chip and commonly used peripherals. According to the firm, it serves as a simplified embedded hardware platform that gives users early access to test and verify neuromorphic application models and to build prototypes.

The Speck Demo Kit, which integrates SynSense’s new dynamic vision module and application model resources, is designed for developing industrial and consumer applications.

The Speck SoC is a fully integrated, multi-core, single-chip sensor and processor. It features an integrated 128 × 128 dynamic vision sensor imaging array for real-time, low-power vision processing in mobile and IoT applications. It performs intelligent scene analysis at micro-power levels (below 10 mW) with real-time response (under 200 ms), and it is fully configurable, with a network capacity of 0.32 million neurons. Speck’s ultra-low power consumption and ultra-low latency pave the way for always-on IoT and edge-computing applications such as gesture recognition, face and object detection, tracking and surveillance.