The market for artificial intelligence (AI) in vision-guided robotics (VGR) is growing rapidly, driven by the need for automation in industries such as manufacturing, logistics, and healthcare.

According to a recent report by SNS Insider, the global robotic vision market is expected to approach $5 billion by 2030, growing at a compound annual growth rate (CAGR) just short of double digits.

Vision systems powered by AI have become an essential component of robotic automation, enabling robots to perceive, process, and interact with their environment more efficiently, accurately, and flexibly.

Set against the current growth in AI research and applications, the demand for AI-powered VGR systems is also increasing, as companies seek to raise productivity, reduce costs, and improve the quality of their products.

However, there are also challenges associated with the adoption of AI in VGR, such as the need for skilled personnel to develop and maintain the systems, and concerns about job displacement.

One of the key benefits of AI in VGR is ease of use, as AI algorithms enable robots to learn from human operators and perform complex tasks with minimal programming. Additionally, AI improves the stability and accuracy of VGR systems, reducing error and improving product quality. Another benefit of AI in VGR is the ability to detect non-rigid objects, such as fabrics and liquids, which are difficult to handle with traditional VGR systems.

Oliver Selby, Fanuc’s Head of Sales, says: “We are now seeing more robots used in agriculture to pick fruit or vegetables, and AI is also being used to ensure that fruit has grown sufficiently.”

Fanuc uses AI-powered tools to improve accuracy, reduce programming time, and improve the overall stability of its systems. It believes that AI technology can help to overcome the limitations of traditional VGR systems and bring new levels of accuracy and efficiency to manufacturing operations.

With its extensive experience in robotics and automation, Fanuc has been able to develop a range of tools that can be easily integrated into existing VGR systems. These include an AI error-proofing application, which is designed to detect and prevent errors before they occur, ensuring that products meet the required specifications. The system uses AI algorithms to analyse data from sensors and cameras, identifying any deviations from the required parameters. The system can then take corrective action, such as stopping a production process or adjusting a robot’s movements. This reduces the risk of defects and improves the overall quality of the final products.
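The detect-then-correct loop described above can be illustrated with a minimal sketch. It is not Fanuc's implementation: a fixed tolerance check stands in for the AI model, and the names `Tolerance`, `find_deviations` and `corrective_action`, along with the example parameters, are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Tolerance:
    """Required value and allowed deviation for one measured parameter (hypothetical)."""
    nominal: float
    max_deviation: float


def find_deviations(measured, tolerances):
    """Return the names of parameters that are missing or outside tolerance."""
    violations = []
    for name, tol in tolerances.items():
        value = measured.get(name)
        # A missing reading is treated as a deviation rather than silently ignored.
        if value is None or abs(value - tol.nominal) > tol.max_deviation:
            violations.append(name)
    return violations


def corrective_action(violations):
    """Assumed policy: stop the process on any deviation, otherwise carry on."""
    return "stop" if violations else "continue"


# Example values are illustrative only.
tolerances = {
    "width_mm": Tolerance(nominal=50.0, max_deviation=0.5),
    "hole_offset_mm": Tolerance(nominal=12.0, max_deviation=0.2),
}
measured = {"width_mm": 50.3, "hole_offset_mm": 12.4}
violations = find_deviations(measured, tolerances)
print(violations, corrective_action(violations))
```

In a real system the fixed thresholds would be replaced by whatever model the vendor uses to judge sensor and camera data, and the corrective action could equally be an adjustment to the robot's movements rather than a stop.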

Fanuc’s Head of Sales, Oliver Selby, is now seeing more interest among industry customers in exploring the benefits of AI (Image: FANUC)

Fanuc has also developed an AI-driven box palletising tool to improve the efficiency of packaging operations. It analyses data from sensors and cameras to optimise the placement of products on pallets, reducing the time and effort required for manual sorting and arranging. It can also automatically adjust the robot’s movements based on the size and shape of the products, ensuring that they are placed in the correct position. This reduces the risk of damage to the products and improves the speed and accuracy of the packaging process.

Stronger together: robotics and imaging firms

With the increasing enthusiasm for AI in VGR among factory customers, collaboration between robotics firms and imaging and machine vision firms has become critical for the integration of advanced AI-powered vision systems into the production line. For example, Kuka and Roboception have partnered to develop AI-powered 3D vision solutions for VGR systems, resulting in the development of the rc_visard 3D stereo sensor. This uses AI algorithms to recognise the position and orientation of objects in 3D space. The system can provide the robot with the appropriate gripping points, allowing it to manipulate objects with greater accuracy and efficiency.

One successful implementation of Kuka and Roboception’s AI-driven VGR system is at Danfoss, a Danish manufacturer of mobile hydraulics and electronic components. It has been used to automate the handling of up to 100 different components without manual intervention. The system uses the rc_visard 3D stereo sensor to detect the position and orientation of objects on a flat paper surface, providing the robot with the appropriate gripping points. The system has significantly improved the efficiency and stability of Danfoss’s production processes, while also ensuring greater safety for its employees.

AI offers a bright future for VGR

Overall, the applications that will benefit the most from AI in VGR are those that require high levels of accuracy, efficiency, and flexibility, such as inspection, packaging, and collaborative applications.

Given the commitment of major robotics players to AI, it’s set to play a significant role in the future of VGR. Furthermore, the use of AI in VGR has led to advancements in object recognition and detection, particularly in non-rigid objects such as food products or textiles. With traditional vision systems, it was often difficult to accurately detect and handle these types of objects due to their deformable nature. However, AI-powered VGR systems have the ability to recognise and handle these objects with ease, allowing for increased efficiency and accuracy in industries such as food processing and textile manufacturing.

Whether it’s Fanuc’s AI-driven tools for error proofing and box palletising, or Kuka/Roboception’s AI-powered 3D vision solutions, a common theme is the ability of AI in VGR to do things “without manual intervention”. This presents significant market implications. Enhanced automation capabilities can lead to increased productivity and cost savings for manufacturers. With AI-driven tools that optimise processes, companies can reduce manual labour, minimise human error, and streamline operations. This increased efficiency could result in higher profit margins for businesses and foster greater competitiveness within the industry.

Fanuc’s box palletising tool incorporates AI to improve the efficiency of packaging operations (Image: Shutterstock/Blue Planet Studio)

In inspection applications, AI can accurately detect defects or flaws in products, ensuring higher quality and reducing the need for manual inspections. In packaging, AI can facilitate proper handling and packaging of products, reducing damage during transport and ultimately improving customer satisfaction.

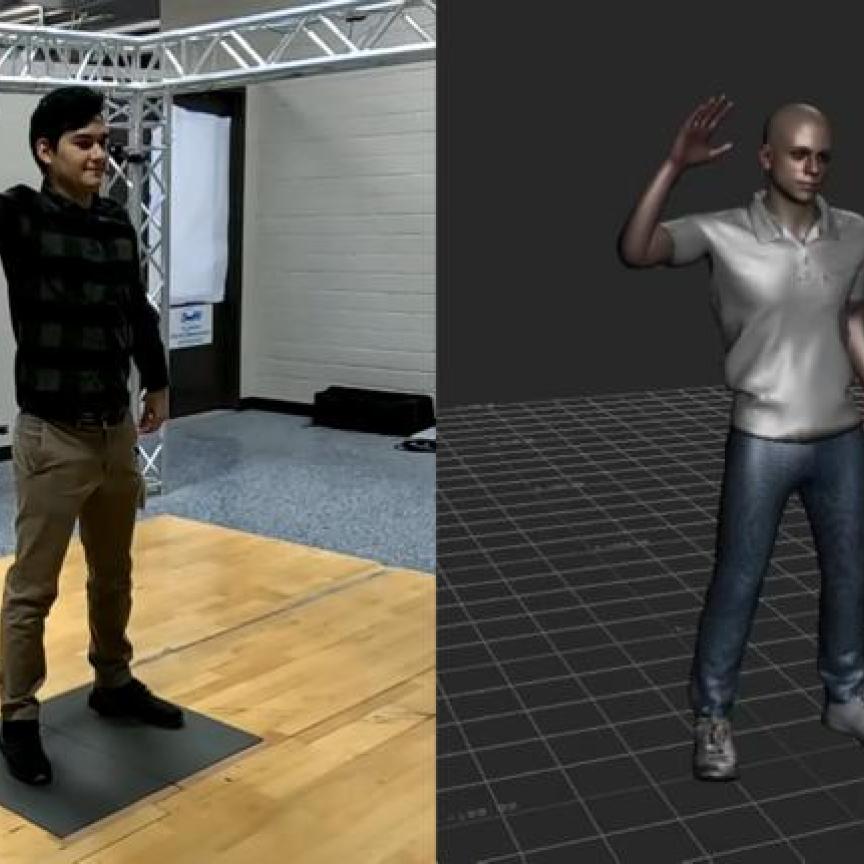

Collaborative applications, in which humans work alongside robots on production lines, stand to benefit significantly from AI integration. AI can enhance worker safety by monitoring and managing robot movements, ensuring that they do not pose a threat to their human counterparts.

Furthermore, AI can optimise the production process by learning from human operators, resulting in a more efficient and harmonious workflow.

Selby says: “With the assistance of AI, set-up times can be shortened as less knowledge of vision theory is required. This allows more end-user employees to utilise the technology without the need to hire specialists, making it particularly cost-effective for small batch production where the product or environment frequently changes.”

The growing adoption of AI in VGR could also stimulate innovation and investment within the robotics and automation industry. As more companies recognise the potential benefits of AI-driven VGR, demand for advanced robotic systems and software solutions is likely to increase. This demand could encourage research and development efforts, leading to further advancements in AI and robotics technologies.

With the adoption of AI in VGR by firms such as Fanuc, Kuka, and Roboception, the potential for further development and innovation in this field is immense. While firms such as these are clear on the economic case for greater use of AI, their messaging does not address the effect on human labour except to highlight safety improvements and the reduced need for human expertise in operating AI-based systems.

Selby is generally optimistic about the way that people are responding to the dawn of the age of AI in the workplace: “In the past, people were reluctant to use AI applications. However, with the emergence of ChatGPT and other AI tools, customers have come to appreciate the advantages of AI in their daily lives. As a result, they are now more interested in exploring the benefits of AI in industry, too.

“Furthermore, there are situations where AI is the only viable solution to a problem. In such cases, customers have no choice but to rely on AI technology to address their needs.”

The textile and garment industry is facing major challenges with current supply chain and energy issues. The future recovery is also threatened by factors that hinder production, such as labour and equipment shortages, which put the industry under additional pressure. Its competitiveness, especially in a global context, depends on how affected companies respond to these framework conditions.

One solution is to move the production of clothing back to Europe in an economically viable way. Shorter transport routes and the associated significant savings in transport costs and greenhouse gases speak in favour of this. On the other hand, the related higher wage costs and the prevailing shortage of skilled workers in this country must be compensated. The latter requires further automation of textile processing.

The German deep-tech start-up sewts, from Munich, has focused on the great potential that lies in this task. It develops solutions that will help robots anticipate how a textile will behave and adapt their movement accordingly, in a way similar to humans. In the first step, sewts has set its sights on an application for large industrial laundries, with a system that uses both 2D and 3D cameras from Imaging Development Systems (IDS).

sewts has developed a solution in which robots can anticipate how a textile will behave and adapt their movement accordingly (Credit: IDS)

Automating laundry processes: overcoming workforce challenges with intelligent systems

IDS is automating one of the last remaining manual steps in large-scale industrial laundries, the unfolding process. Although 90% of the process steps in industrial washing are already automated, the remaining manual operations account for 30% of labour costs. The potential savings through automation are therefore enormous at this point.

Textile handling: how the sewts system VELUM tackles automation challenges

It is true that industrial laundries already operate in a highly automated environment to handle the large volumes of laundry. Among other things, the folding of laundry is done by machines. However, each of these machines usually requires an employee to manually spread out the laundry and feed it without creases.

This monotonous and strenuous loading of the folding machines has a disproportionate effect on personnel costs. In addition, a qualified workforce is difficult to find, which often has an impact on the capacity utilisation and thus the profitability of industrial laundries. The seasonal nature of the business also requires a high degree of flexibility. sewts has made IDS cameras the image processing components of a new type of intelligent system. Its technology can now be used to automate individual steps, such as sorting dirty textiles or inserting laundry into folding machines.

“The particular challenge here is the malleability of the textiles,” explains Tim Doerks, co-founder and CTO at sewts. While the automation of the processing of solid materials, such as metals, is comparatively unproblematic with the help of robotics and AI solutions, available software solutions and conventional image processing often still have their limits when it comes to easily deformable materials.

Accordingly, commercially available robots and gripping systems have so far performed even simple operations, such as gripping a towel or piece of clothing, only inadequately. The sewts system VELUM closes this gap. With the help of intelligent software and easy-to-integrate IDS cameras, it is able to analyse dimensionally unstable materials such as textiles. Thanks to the new technology, robots can predict the behaviour of these materials during gripping in real time. This enables VELUM to feed towels and similar linen made of terry cloth easily and crease-free into existing folding machines, thus closing a cost-sensitive automation gap.

Ensenso S: advanced 3D imaging and AI for precise textile material analysis

Equipped with a 1.6 MP Sony sensor, the Ensenso S10 uses a 3D process based on structured light. A narrow-band infrared laser projector produces a high-contrast dot pattern even on objects with difficult surfaces or in dimly lit environments. Each image captured by the 1.6 MP Sony sensor provides a complete point cloud with up to 85,000 depth points.

sewts selected both 2D and 3D cameras from Imaging Development Systems (IDS) to help automate processes in large-scale industrial laundries (Credit: IDS)

Artificial intelligence enables reliable assignment of the laser points found to the hard-coded positions of the projection. This results in the robust 3D data with the necessary depth accuracy, from which VELUM extracts the coordinates for the gripping points.
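To make the step from 3D data to a gripping point concrete, here is a deliberately simple sketch. It is not how VELUM works, since the article describes AI that predicts textile behaviour. As a stand-in, it picks the highest valid point of a point cloud as a grasp candidate. The function name `pick_grip_point`, the coordinate convention (metres, with z as height above the table) and the sample data are all assumptions.

```python
import math


def pick_grip_point(points, min_height=0.0):
    """Return the highest valid (x, y, z) point, or None if there is none.

    Assumes z is the height above the work surface in metres. Points with
    non-finite coordinates (missing depth) or at/below min_height are skipped.
    """
    valid = [
        p for p in points
        if all(math.isfinite(c) for c in p) and p[2] > min_height
    ]
    if not valid:
        return None
    return max(valid, key=lambda p: p[2])


# Illustrative point cloud: three valid depth points and one missing reading.
cloud = [
    (0.10, 0.20, 0.01),
    (0.12, 0.22, 0.05),
    (float("nan"), 0.00, 0.90),
    (0.30, 0.10, 0.03),
]
print(pick_grip_point(cloud))
```

A heuristic like this ignores how the fabric will deform once lifted, which is exactly the problem the article says requires a learned model.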

The complementary IDS industrial camera with GigE Vision is equipped with Sony’s IMX264 global shutter sensor. It delivers virtually noise-free, high-contrast 5 MP images in 5:4 format. The uEye CP camera offers maximum functionality with extensive pixel preprocessing and is perfect for multi-camera systems thanks to the internal image memory for buffering image sequences. At around 50g, the small magnesium housing is as light as it is robust, which makes the camera well suited to space-critical applications and to use on robot arms.

With systems such as VELUM, laundries can significantly increase their throughput regardless of the staffing situation and thus increase their profitability. “By closing this significant automation gap, we can almost double the productivity of a textile washing line,” assures sewts CEO, Alexander Bley.