The concept of the fourth industrial revolution, or Industry 4.0, has been around for more than a decade, with big names such as Amazon, Siemens and Boeing among the early adopters of smart factory technology to make their production processes more efficient.

While many markets have been negatively impacted by Covid, manufacturing has been considered an essential or frontline service. Now, orders for automation equipment in manufacturing are coming thick and fast, and businesses are recognising that they need digital technologies in order to run more smoothly.

As an example, telecoms vendor Huawei last year launched its 5G digital engineering solution, based on the idea of site digital twins. It involves creating a digital replica of a physical site, so that factory infrastructure can be managed digitally throughout its lifecycle, from planning and design, to deployment and maintenance. Based on the digital twins, Huawei is using advanced photogrammetry and AI to help with digital network specification for telecoms.

This is something with which vision integration firm, Asentics, has been closely involved. The firm’s chief executive, Dr Horst Heinol-Heikkinen, is also chairman of the VDMA OPC Vision group, a joint standardisation initiative led by the VDMA and the OPC Foundation, which aims to include machine vision in the industrial interoperability standard, Open Platform Communications Unified Architecture (OPC UA). The open interface OPC UA was established as a standard in Industry 4.0, leading to the creation of the series of companion specifications to ensure the interoperability of machines, plants and systems.

Under control

The machine vision companion specification for OPC UA was launched to facilitate the generalised control of a machine vision system and abstract the necessary behaviour via a state model. The assumption of the model is that the vision system in a production environment goes through a sequence of states that are of interest to, and can be influenced by, the environment. In addition to the information collected by image acquisition and transmitted to the environment, the vision system also receives relevant information from the environment. Because of the diversity of machine vision systems and their applications, other methods and manufacturer-specific extensions are necessary to manage the information flow. The vision companion specification is therefore an industry-wide standard that is designed to allow freedom for changes or individual additions. While part one of the companion specification focuses on the standardised integration of machine vision systems into automated production systems, part two, currently in progress, will build on this to describe the system and its components.
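The state-model idea can be illustrated with a small sketch. The state and event names below are simplified assumptions chosen for illustration, not the definitions used in the companion specification; the point is only that the environment drives the vision system through a known set of states, and that the system hands results back as it goes.

```python
from enum import Enum, auto


class State(Enum):
    # Illustrative states only; the actual specification defines its own set.
    PREOPERATIONAL = auto()
    READY = auto()
    EXECUTING = auto()
    ERROR = auto()


# Allowed transitions: (current state, event) -> next state.
# The event names are hypothetical.
TRANSITIONS = {
    (State.PREOPERATIONAL, "select_recipe"): State.READY,
    (State.READY, "start_job"): State.EXECUTING,
    (State.EXECUTING, "job_done"): State.READY,
    (State.READY, "fault"): State.ERROR,
    (State.EXECUTING, "fault"): State.ERROR,
    (State.ERROR, "reset"): State.PREOPERATIONAL,
}


class VisionSystem:
    """Toy vision system whose state the environment can read and influence."""

    def __init__(self):
        self.state = State.PREOPERATIONAL
        self.results = []

    def handle(self, event, payload=None):
        """Apply an event from the environment, rejecting illegal transitions."""
        key = (self.state, event)
        if key not in TRANSITIONS:
            raise ValueError(f"{event!r} not allowed in state {self.state.name}")
        self.state = TRANSITIONS[key]
        if event == "job_done":
            # Results flow back to the environment when a job completes.
            self.results.append(payload)
        return self.state


vs = VisionSystem()
vs.handle("select_recipe")
vs.handle("start_job")
vs.handle("job_done", {"part_ok": True})
print(vs.state.name, vs.results)
```

Keeping the transitions in a single table is one way to leave room for the manufacturer-specific extensions the specification allows: an integrator could add states or events without touching the handling logic.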

The focus will be on asset management and condition monitoring of these components. It’s not only image processing that is establishing a standard; the number of information models in general is increasing, with more companies and industry sectors getting involved. It is these models that form the foundation for the next goal: the digital twin.


Asentics is heavily involved in this development process and is a member of the recently formed Industrial Digital Twin Association (IDTA). The aim of the association is to use the already established digital twin as an interoperable core technology for all future developments with regard to Industry 4.0. Heinol-Heikkinen is deputy chairman of IDTA. He says: ‘The digital twin is the key investment in the future viability and crisis resilience of mechanical engineering. It is also an effective tool for implementing all of those Industry 4.0 standardisation measures. But, above all, it is the enabler of our future business fields.’

Integrators remain vital

Industry 4.0 might lead to further demand for automation and vision systems, but integrating vision technology for manufacturing was around long before Industry 4.0, as David Dechow, principal vision systems architect at vision integrator, Integro Technologies, can attest. ‘During the 40 years in which I have been working [with vision], there have been significant advances in machine vision systems,’ he says. ‘I think the growth and the capability of that technology has never before been as good as it is now. It is a fantastic enabling technology for other technologies within automation and what makes it fun is the diversity of what you work with, even the most challenging applications.’

As to whether new technology or concepts, such as Industry 4.0, mean there’s a shift in the role of the machine vision integrator, Dechow is not so sure. ‘The machine vision industry has evolved,’ he says, ‘but end users look to developments like Industry 4.0 and smart manufacturing or the industrial internet of things in the same way they have always looked to machine vision integration: to improve their processes and make them more efficient.’

The more cutting-edge application areas, Dechow says, will still require a detailed process with steps for planning, design, implementation and deployment, as with all machine vision integration tasks. But some of them, such as deep learning in industrial settings, may require a larger team or different skill sets than those used in standard machine vision.

‘While Industry 4.0 has a lot of buzz surrounding it,’ Dechow says, ‘with many articles warning that manufacturers who don’t adopt it early enough will fail, we need to make sure that this doesn’t dilute the end user’s understanding about what machine vision can do. They [end users] have always turned to machine vision integrators to improve their processes. It has always been our role to make the system work, even in more complex applications, using the most successful machine vision solution for their particular requirements.’

Seat of power

Likewise, Leoni Vision Solutions, part of Leoni Engineering Products and Services (LEPS), emphasises the important role that vision integrators play. The company has an in-house machine vision development laboratory to run feasibility studies, and ultimately create a custom solution.

One of the inspection systems Leoni has built is for checking seat mould assembly. This is a challenging vision task, where the system is used to verify components that have been encapsulated into each foam piece. The increasingly complex design and large variety of seat moulds require an inspection system capable of adapting without interrupting the run.

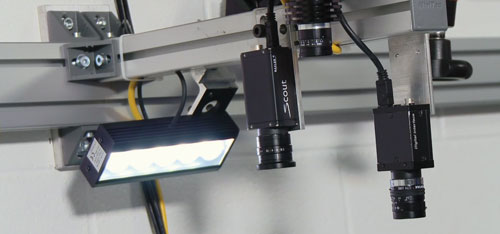

The inspection process begins right after the operators finish placing components into the cavity. One of the inspection system’s cameras captures images of predetermined points in the cavity where most variation occurs. The system uses a dedicated camera to measure the intensity of the mould, so that the main inspection cameras can be offset to create maximum contrast between the components and the mould itself.

Leoni Vision Solutions builds and integrates custom inspection systems. Credit: Leoni Vision Solutions

‘It additionally compensates for different seat geometries and mould depths by strobing an extraordinary amount of light and using a novel optics and lighting setup,’ says Jim Reed, vision product manager at LEPS. This increases depth of field, which enables the system to cover the full range of depth required to inspect all seats and moulds. ‘Whether the cavity is four, eight or 10 inches deep, we can keep that whole range in focus,’ he adds.

Next, the main inspection cameras verify that correct components are placed in the proper locations in the seat cavity. Once the system software confirms the presence of the components, it issues a pass or fail determination. When a mould passes, the system sends a signal to the programmable logic controller. The mould then travels to the robot pour station, where it’s filled with foam, the lids close and the feed is completed. If the system detects missing components, it alerts the controller to not pour that seat mould.
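The pass or fail step described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Leoni's software: the location labels, component names and the `POUR` / `DO_NOT_POUR` signal strings are all hypothetical, and the detections are assumed to come from the upstream vision tools.

```python
def inspect_mould(expected, detected):
    """Compare detected components against the expected layout.

    expected: dict mapping a cavity location to the component required there.
    detected: dict mapping a cavity location to the component actually found.
    Returns (passed, faults), where faults maps each bad location to
    (wanted component, found component or None if nothing was found).
    """
    faults = {
        location: (wanted, detected.get(location))
        for location, wanted in expected.items()
        if detected.get(location) != wanted
    }
    return (not faults), faults


def plc_signal(passed):
    """Translate the inspection verdict into a signal for the controller."""
    return "POUR" if passed else "DO_NOT_POUR"


# Hypothetical part definition and one inspection result with a missing part.
expected = {"A1": "clip", "B2": "wire_frame", "C3": "fastener_strip"}
detected = {"A1": "clip", "B2": "wire_frame"}

passed, faults = inspect_mould(expected, detected)
print(plc_signal(passed), faults)
```

Returning the faulty locations alongside the verdict means the same result can both block the pour and tell the operator which component to correct.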

Deep learning can be incorporated for instances when moulds have handwriting or machining marks, or if they look different from one another, even if the machine is producing the same part. Previously, each mould had to be programmed individually, but by incorporating deep learning into the process, operators can program a single part number rather than every seat cavity in-house, saving a lot of time in the process.

Deep impact

While deep learning isn’t a magic bullet in industrial inspection, it can offer value, especially when features to be detected require subjective decisions like those that might be made by a human. ‘These systems are incredibly complicated from a vision standpoint, because of the sheer volume and variation between part numbers and models, as well as the programming and changes,’ says Reed. ‘One mould may have 30 inspections being performed on it, so if you take those inspections and multiply it by 200 part styles for 1,500 different models, you can quickly see how this becomes quite complex.’

One of the potential advantages of using deep learning to accommodate more variation in the part is that less time is spent programming and maintaining the system. ‘These and many other advanced vision techniques,’ says Reed, ‘provide a wide range of integrated solutions for many potentially challenging applications, beyond just the mould insert verification and inspection.’

- AT – Automation Technology develops 3D application for fruit and vegetable labelling
- 3D sensor technology from AT enables future-oriented food tracking
- EcoMark relies on sensor solution from AT from the very beginning

By Nina Claaßen

Fast, precise, flexible: AT – Automation Technology has made an international name for itself with its 3D sensor portfolio and has always been one of the leading industry suppliers. The reason for this is that the 3D sensors are developed individually, so the company from northern Germany can offer a suitable solution for almost any application, manufactured exactly to the customer’s specifications, with no extra costs for custom manufacturing, no minimum order quantity and no long waiting times.

One company that benefits from these advantages of AT 3D sensors is EcoMark GmbH, which specialises, among other things, in labelling food directly on the peel via laser marking. The aim is partly to reduce plastic and packaging, and partly to provide a sustainable, environmentally friendly process that is also demonstrably economical. However, food branding required a great deal of technical know-how right from the start, as there were a number of important details to consider when labelling food.

Technical requirements for the 3D application of food branding

To be able to position the marking laser individually for each product and thus guarantee a 100% hit rate for the marking of groceries, an individual application was required. With the help of a 3D sensor, this application had to be able to determine the position of the fruit and vegetables on the conveyor belt. The challenge was to develop a 3D scan solution that is not only highly precise and fast, but also sends reliable data to the marking laser, despite changing measurement widths and different positions of the fruit and vegetables. AT’s solution: a product from the C5-2040CS sensor family. The 2040 sensors not only combine speed and accuracy, they also allow a measuring width of up to one metre, with a high resolution of 2,048 measuring points. Furthermore, they are virtually maintenance-free and require hardly any support, as they are already factory calibrated and can be easily integrated into any existing system via Plug&Play using their GigE Vision interface without much installation effort.

‘EcoMark is a perfect example of the unique diversity of AT’s 300+ sensor variants,’ explains Michael Wandelt, chief executive of AT. ‘Based on the customer’s technical data, we were able to deliver the right 3D sensor to meet their exact requirements. We always try to offer the best possible product for each of our customers to significantly benefit their processes and increase their effectiveness.’

Food branding is significantly more economical than packaging

However, the focus is also on the cost-effectiveness of this process optimisation. ‘We are very optimistic that the trend of food labelling directly on the peel will become established in the future,’ said Richard Neuhoff, chief executive of EcoMark. ‘Ultimately, this method is significantly cheaper than previous plastic solutions, as packaging costs are completely eliminated. We only incurred costs at the beginning of production due to the purchase of the labelling system; further follow-up costs are very low.’

Even before EcoMark developed machines for food branding, the company already specialised in laser machines for marking any material. Drawing on years of expertise in this area, the idea of so-called natural branding was born in 2018, with which the company was able to attract global attention. EcoMark GmbH is now one of the largest international providers of food and natural branding. In general, its labelling machine marks up to 100,000 fruit products per hour, depending on the thickness of the peel and the nature of the variety. ‘Each fruit and vegetable product has a different size and, of course, a different peel, so we always have to be careful to find the best compromise between the visibility of the marking and the shelf life of the product,’ Neuhoff continues. ‘If the laser were set incorrectly, for example, the peel would be destroyed in the process, so you have to know very well what you are doing.’

Inside a branding machine

A 3D application with a future

For the application that EcoMark has developed, it is irrelevant whether a kiwi or a cucumber is lying on the conveyor belt. The 3D sensor scans the fruit and vegetables flexibly and creates a 3D point cloud for each product within milliseconds, according to which the marking laser is then aligned. To date, AT has supplied EcoMark with 15 C5-2040CS sensors for the food branding application, with further sensors for other industries in the pipeline. The sensors have been in continuous use since they were first integrated into the labelling system.
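How a point cloud might be turned into a laser target can be sketched roughly as follows. This is an illustrative assumption, not EcoMark's or AT's actual algorithm: the function name, the millimetre thresholds and the idea of aiming at the centre of the highest surface band are all hypothetical.

```python
def laser_target(points, belt_height_mm=0.0, min_height_mm=5.0, top_band_mm=2.0):
    """Estimate a marking position from a 3D point cloud.

    points: iterable of (x, y, z) tuples in millimetres.
    Points less than min_height_mm above the belt are treated as conveyor.
    Returns (x, y, z_top) for the centre of the highest surface band,
    or None if no product is present.
    """
    product = [
        (x, y, z) for x, y, z in points
        if z - belt_height_mm >= min_height_mm
    ]
    if not product:
        return None

    z_top = max(z for _, _, z in product)
    # Keep only points close to the top of the product, where the
    # surface faces the laser most directly.
    band = [(x, y) for x, y, z in product if z_top - z <= top_band_mm]
    centre_x = sum(x for x, _ in band) / len(band)
    centre_y = sum(y for _, y in band) / len(band)
    return centre_x, centre_y, z_top


# Hypothetical scan: two belt points and four points on one piece of fruit.
cloud = [
    (0.0, 0.0, 0.0), (200.0, 100.0, 0.3),
    (98.0, 50.0, 20.0), (100.0, 50.0, 38.0),
    (102.0, 50.0, 40.0), (104.0, 50.0, 39.0),
]
print(laser_target(cloud))
```

Because the target is derived from the measured surface rather than from a fixed product model, the same routine applies whether the scan contains a kiwi or a cucumber.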

Further information www.eco-mark.de; www.youtube.com/watch?v=jpcbInyUtv0