Traceability is a key aspect of modern production that records the history of an item as it passes through its production cycle. In the pharmaceutical industry, traceability – often referred to in this context as serialisation or track-and-trace – plays an important role in preventing the distribution of counterfeit drugs. With it, pharmacies at the end of the supply chain can scan the codes on their products and check their authenticity against a central database. This not only ensures patient safety, but also makes sure that all pharmaceutical goods sold in a country are taxed. Pharmaceutical products distributed in the US have had to be serialised since 2018, and those distributed within the EU since 2019.
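
The scan-and-check step can be sketched in a few lines of Python. This is a minimal illustration. It assumes the pack carries a GS1 element string with the Application Identifiers (01) GTIN, (17) expiry date, (10) batch and (21) serial number, as serialised medicine packs commonly do. `REGISTRY` is a hypothetical in-memory stand-in for the central database, not the interface of any real national verification system.

```python
GS = "\x1d"  # group separator (FNC1) that terminates variable-length fields
FIXED_LENGTH = {"01": 14, "17": 6}  # GTIN-14, expiry date (YYMMDD)
VARIABLE_LENGTH = {"10", "21"}      # batch/lot, serial number


def parse_gs1(data: str) -> dict[str, str]:
    """Split a GS1 element string into {application identifier: value}."""
    fields: dict[str, str] = {}
    i = 0
    while i < len(data):
        if data[i] == GS:  # skip separators between fields
            i += 1
            continue
        ai = data[i:i + 2]
        i += 2
        if ai in FIXED_LENGTH:
            n = FIXED_LENGTH[ai]
            value = data[i:i + n]
            if len(value) != n:
                raise ValueError(f"truncated field ({ai})")
            i += n
        elif ai in VARIABLE_LENGTH:
            end = data.find(GS, i)
            end = len(data) if end == -1 else end
            value = data[i:end]
            i = end  # the separator, if present, is skipped on the next pass
        else:
            raise ValueError(f"unsupported application identifier ({ai})")
        fields[ai] = value
    return fields


def gtin_is_valid(gtin: str) -> bool:
    """GS1 mod-10 check: weights 3, 1, 3, ... from the rightmost data digit."""
    if len(gtin) != 14 or not gtin.isdigit():
        return False
    body, check = gtin[:-1], int(gtin[-1])
    total = sum(int(d) * (3 if k % 2 == 0 else 1) for k, d in enumerate(reversed(body)))
    return (10 - total % 10) % 10 == check


# Hypothetical central registry: (GTIN, serial number) -> status
REGISTRY = {("09506000134352", "SN0001"): "active"}


def verify_pack(scan: str, registry: dict) -> str:
    try:
        fields = parse_gs1(scan)
    except ValueError:
        return "unreadable"
    gtin, serial = fields.get("01"), fields.get("21")
    if gtin is None or serial is None or not gtin_is_valid(gtin):
        return "invalid code"
    status = registry.get((gtin, serial))
    if status is None:
        return "unknown serial"  # never registered: possible counterfeit
    if status != "active":
        return f"rejected ({status})"
    registry[(gtin, serial)] = "dispensed"  # decommission so the code cannot be reused
    return "authentic"


scan = "01095060001343521727123110ABC123" + GS + "21SN0001"
print(verify_pack(scan, REGISTRY))  # authentic
print(verify_pack(scan, REGISTRY))  # rejected (dispensed)
```

Marking the pack as dispensed on its first successful check is what exposes a copied code: a second pack carrying the same serial number is rejected.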

The age of the smart camera

While barcode readers have long been a go-to solution for traceability in pharmaceutical production, the distinction between barcode readers and machine vision systems has gradually disappeared over the past 20 years. According to John Agapakis, director of business development for traceability solutions at Omron Automation Americas, traceability today, whether it relies on human- or machine-readable codes, is typically handled by a smart camera.

Unlike a standard barcode reader, a smart camera can read both machine- and human-readable codes. This matters because pharmaceutical traceability regulations also require information on product packaging to be human-readable, so that consumers can read it. Vision algorithms also check for faded, marked, skewed, wrinkled, misprinted or torn codes on packaging.

Smart cameras also offer a wider field of view and higher resolution than barcode readers, which means they can inspect large cases of products. Agapakis said that vision systems with cameras of up to 20-megapixel resolution can be used for this type of inspection, whereas barcode readers rarely exceed five megapixels.
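
The sketch below shows why resolution matters for large cases. It estimates the widest field of view at which a code's cells are still resolved. It assumes a minimum of two pixels per cell (a commonly quoted rule of thumb, not a specification), 0.5 mm cells, and illustrative sensor widths of 5472 pixels for a 20-megapixel sensor and 2448 pixels for a 5-megapixel one. All of these figures are assumptions, not numbers from Omron.

```python
# Assumed minimum number of pixels across one code cell (module); a rule of
# thumb for 2D codes, not a vendor specification.
MIN_PX_PER_CELL = 2.0


def max_field_of_view_mm(sensor_px: int, cell_mm: float,
                         min_px_per_cell: float = MIN_PX_PER_CELL) -> float:
    """Widest field of view (mm) along one sensor axis that still resolves the cells."""
    return sensor_px * cell_mm / min_px_per_cell


def px_per_cell(sensor_px: int, fov_mm: float, cell_mm: float) -> float:
    """Number of pixels spanning one cell for a given field of view."""
    return sensor_px / fov_mm * cell_mm


# Illustrative sensor widths: about 5472 px for 20 MP, 2448 px for 5 MP.
for name, width_px in [("20 MP", 5472), ("5 MP", 2448)]:
    print(name, max_field_of_view_mm(width_px, cell_mm=0.5))
# 20 MP 1368.0
# 5 MP 612.0
```

Under these assumptions, the 20-megapixel camera can cover a field of view a little over twice as wide as the 5-megapixel one.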

Their flexibility, combined with the fact that smart cameras have become smaller and more affordable over the past decade, means that more of them can be deployed on pharmaceutical production lines. ‘It is now easy to position six to eight smart camera-based readers in a ring around a conveyor and network them in a daisy chain fashion in order to inspect the codes on pill bottles and other pharmaceutical products as they move down a production line,’ said Agapakis. ‘The cameras are all networked together and essentially perform as a single reader. All of them are triggered at once, and then whichever camera captures the code is the one that ends up sending the data to the pharmaceutical firm’s database.’
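
The behaviour Agapakis describes, with every camera triggered together and a single result reported, can be modelled in a few lines. This is a hypothetical geometric sketch, not Omron's networking protocol. It assumes the cameras are evenly spaced around the conveyor and that each can decode a label facing within an assumed angle (`half_angle`) of it. Evenly spaced cameras leave the worst-case orientation midway between two neighbours, so full coverage needs `half_angle >= 180 / n_cameras`.

```python
def angular_distance(a: float, b: float) -> float:
    """Smallest angle in degrees between two directions."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)


def ring_positions(n_cameras: int) -> list[float]:
    """Cameras evenly spaced around the conveyor, in degrees."""
    return [i * 360.0 / n_cameras for i in range(n_cameras)]


def trigger_ring(code_angle: float, n_cameras: int, half_angle: float, payload: str):
    """Trigger every camera at once; the chain reports a single result.

    A camera is assumed to decode the code if the label faces within
    `half_angle` degrees of it. Returns (camera index, payload) or None.
    """
    readers = [i for i, pos in enumerate(ring_positions(n_cameras))
               if angular_distance(code_angle, pos) <= half_angle]
    if not readers:
        return None  # no camera could read the label
    # Report once: the lowest-index successful reader stands in for
    # "whichever camera captures the code"; the other reads are ignored.
    return readers[0], payload


def full_coverage(n_cameras: int, half_angle: float) -> bool:
    """True if every label orientation is within reach of at least one camera."""
    return half_angle >= 180.0 / n_cameras


print(trigger_ring(100.0, 6, 35.0, "SN0001"))  # (2, 'SN0001')
print(full_coverage(6, 35.0), full_coverage(4, 35.0))  # True False
```

Under the assumed 35-degree half-angle, six cameras cover every orientation while four leave gaps.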

This 360-degree reading set-up, which according to Agapakis is common in serialisation applications, allows bottles to be scanned in any orientation, so no time has to be spent rotating each one to face a single camera. As a result, up to 600 bottles per minute can be inspected with this set-up.

Vision processing is now also powerful enough to read low-contrast codes marked directly on products. This is significant for the medical device industry, as new regulations require that information uniquely identifying each device – a unique device identifier, or UDI – be assigned to all medical devices. For reusable devices or instruments, this data has to be permanently marked on the device using a Data Matrix 2D code. Surgical instruments and implants carry these codes, which are usually laser-marked and often have much lower contrast than codes printed on labels. In addition, Data Matrix codes on spinal implants or electronic components can have cell sizes as small as 0.05mm. Smart camera technology now makes it possible to read these reliably and at speed.
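
A quick worked budget puts these figures in context. At 600 bottles per minute, the camera ring has 100 ms per bottle to trigger, decode and report. For 0.05 mm cells, the field of view can be estimated by assuming at least two pixels per cell (a rule of thumb, not a specification) and an illustrative 2448-pixel-wide sensor. Both values are assumptions. Under them, the usable field of view is only about 61 mm:

```latex
t_{\text{bottle}} = \frac{60\ \text{s}}{600\ \text{bottles}} = 0.1\ \text{s} = 100\ \text{ms}

\text{FOV}_{\max} = \frac{N_{\text{px}} \times s_{\text{cell}}}{n_{\min}}
                  = \frac{2448 \times 0.05\ \text{mm}}{2} = 61.2\ \text{mm}
```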

Traceability 4.0

Agapakis gave a presentation on advances in unit-level traceability in the life science industries as part of the AIA’s recent Vision Week.

He explained to attendees that for the past 20 years code reading technologies have enabled what is called ‘unit-level traceability’ to become prevalent in virtually all industries. This involves tracking which components have been used in which assemblies, and which parts have gone through a particular process step at a particular time. He said that gradually, however, some of the more enlightened users and early adopters of this technology have started combining part IDs with process and quality data – obtained using cameras and other sensors – to optimise the production processes themselves.
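
Unit-level traceability involves two kinds of record: which components went into which assemblies, and which parts passed through which process step and when. Both can be sketched as a small in-memory store. The `Genealogy` class and its method names are hypothetical, and a production system would sit on a database. The two queries shown are the kind a recall investigation would ask: where a component ended up, and which parts went through a step in a given time window.

```python
from collections import defaultdict
from datetime import datetime


class Genealogy:
    """Minimal unit-level traceability store (hypothetical, in-memory)."""

    def __init__(self) -> None:
        self.parents: dict[str, set[str]] = defaultdict(set)  # component -> assemblies
        self.steps: list[tuple[str, str, datetime]] = []       # (part_id, step, time)

    def record_assembly(self, assembly_id: str, component_ids: list[str]) -> None:
        for component in component_ids:
            self.parents[component].add(assembly_id)

    def record_step(self, part_id: str, step: str, at: datetime) -> None:
        self.steps.append((part_id, step, at))

    def where_used(self, component_id: str) -> set[str]:
        """All assemblies containing the component, directly or indirectly."""
        found: set[str] = set()
        stack = [component_id]
        while stack:
            for parent in self.parents.get(stack.pop(), ()):
                if parent not in found:
                    found.add(parent)
                    stack.append(parent)
        return found

    def parts_through(self, step: str, start: datetime, end: datetime) -> set[str]:
        """Parts that went through `step` between start and end (inclusive)."""
        return {p for p, s, t in self.steps if s == step and start <= t <= end}


g = Genealogy()
g.record_assembly("KIT-1", ["BOTTLE-7", "CAP-3"])
g.record_assembly("CASE-9", ["KIT-1", "KIT-2"])
print(sorted(g.where_used("CAP-3")))  # ['CASE-9', 'KIT-1']
```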

Omron believes that this use of data to both track products and improve processes marks a new phase in industrial traceability, which it has called Traceability 4.0.

‘Product tracking, component genealogy and supply chain visibility are all key traceability goals. Traceability 4.0 is the union of all these, along with machine and process parameters to improve overall manufacturing effectiveness,’ Agapakis explained. ‘We have now evolved beyond the point where just part ID information is being captured, and now can have a rich set of process data also captured and stored on servers on the line and in the cloud with which processes can be optimised.’
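
At its simplest, combining part IDs with machine and process parameters means joining two data streams on station and time. The sketch below attaches to each code read the most recent parameter snapshot logged by the same station at or before the read (an "as-of" join). The record layouts, station names and parameter names are hypothetical.

```python
from bisect import bisect_right


def attach_process_data(reads, param_log):
    """Attach to each part read the latest parameter snapshot of its station.

    reads:     list of (part_id, station, timestamp)
    param_log: dict station -> list of (timestamp, params dict), sorted by timestamp
    Returns one combined record (dict) per read.
    """
    times = {station: [t for t, _ in log] for station, log in param_log.items()}
    records = []
    for part_id, station, ts in reads:
        log = param_log.get(station, [])
        # index of the last snapshot taken at or before the read
        idx = bisect_right(times.get(station, []), ts) - 1
        params = log[idx][1] if idx >= 0 else {}
        records.append({"part_id": part_id, "station": station, "time": ts, **params})
    return records


param_log = {"filler-2": [(0, {"fill_ml": 10.0}), (100, {"fill_ml": 10.4})]}
reads = [("P1", "filler-2", 50), ("P2", "filler-2", 150)]
for record in attach_process_data(reads, param_log):
    print(record)
```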

Omron’s MicroHawk smart camera being used to read barcodes on pharmaceutical bottles

Although some manufacturers are already employing Traceability 4.0, it represents the future for the majority of manufacturers, he added.

‘Manufacturers can now know everything there is to know across their enterprise about a part or product, including its complete genealogy,’ he continued. ‘Substantial improvements therefore come to light in many areas with Traceability 4.0. The ability to identify specific product failures and production bottlenecks with detailed operating parameters and conditions enables faster and more precise root cause analysis. The potential diagnostic scenarios are virtually limitless.’
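
One simple form of the root cause analysis described here is to group part-level results by a process attribute and rank the failure rates. This sketch assumes each record is a dict with a boolean `passed` field and attributes such as `station`. The field names are hypothetical. A high failure rate shows where to look first; it does not prove a cause.

```python
from collections import Counter


def failure_rate_by(records, key):
    """Failure rate per value of `key` (e.g. station, shift, tool ID)."""
    totals, fails = Counter(), Counter()
    for r in records:
        totals[r[key]] += 1
        if not r["passed"]:
            fails[r[key]] += 1
    return {k: fails[k] / totals[k] for k in totals}


def top_suspect(records, key):
    """Value of `key` with the highest failure rate."""
    rates = failure_rate_by(records, key)
    return max(rates, key=rates.get)


records = (
    [{"station": "A", "passed": True}] * 9 + [{"station": "A", "passed": False}]
    + [{"station": "B", "passed": True}] * 7 + [{"station": "B", "passed": False}] * 3
)
print(top_suspect(records, "station"))  # B
```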

In an advanced state, Traceability 4.0 systems will be able to make decisions that optimise equipment and processes based on acquired data, including automatic predictive maintenance. This will be facilitated by smart cameras and sensors, artificial intelligence (AI) controllers, RFID and advanced data management software. ‘This process knowledge can then lead to improvements in other facilities across the enterprise and around the world,’ Agapakis said.

AI for OCR

Over the past decade, increases in the processing power and memory available on vision systems have made it possible to run machine learning and deep learning algorithms on them. Omron recently announced that its FH line of machine vision systems has built-in AI inspection capabilities. However, rather than requiring thousands of images and a GPU-based processor for training, as is sometimes the case with AI, it needs only about 100 ‘good’ training images, and training can be done on the machine vision system controller itself.

Other Omron machine vision systems already used in pharmaceutical applications – such as the OCR tool in the firm’s MicroHawk smart cameras – incorporate aspects of AI technology.

In addition to reducing the time required to set up applications, deep learning-based OCR will increase the robustness of detection and recognition compared to standard OCR methods.

This was the view of Thomas Hünerfauth, product owner for deep learning in the Halcon software library at MVTec – the company plans to release intelligent OCR functionality, based on deep learning, in November.

‘We already offered something similar in Halcon 13, but the detection aspect was not based on deep learning, only the recognition aspect,’ he said. ‘Now we are taking a new approach for OCR, which is completely based on deep learning technology.’

He explained that the software will take a general approach to OCR that is independent of font type and requires few parameters to be configured. He also described anomaly detection, another deep learning method, which doesn’t need thousands of images for training; instead, the model can be trained using a small number of ‘good’ images.

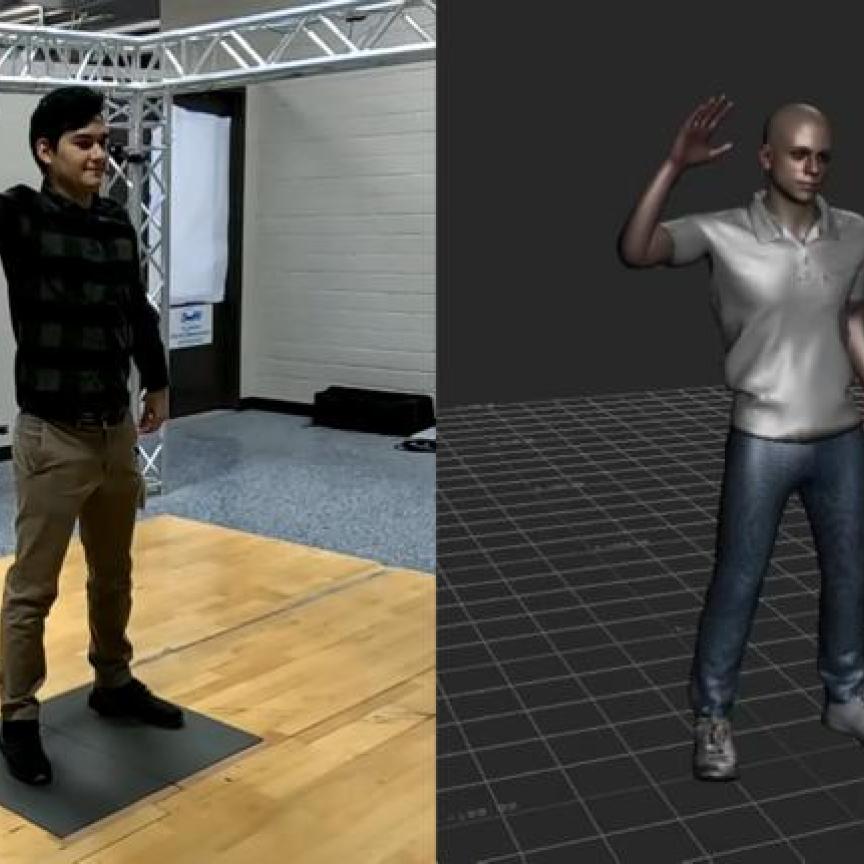

Multiple smart cameras networked together can be used to scan the codes on bottles of any orientation as they move along a conveyor. Credit: Omron

‘When using anomaly detection, you don’t need to know what a defect looks like, but you do need to know what an acceptable result should look like,’ Hünerfauth explained. ‘An anomaly score is then outputted with each image, which represents the possibility of whether there’s an anomaly present. The area where the anomaly is detected can then be segmented for further inspection.’

The caveat to this method is that, because so few images are used, the environmental conditions under which imaging takes place must be kept stable. For example, sunlight entering through a nearby window and falling across the imaging scene would be flagged as an anomaly.
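
The idea of training only on ‘good’ images can be illustrated with a deliberately simple stand-in. The sketch below is not MVTec's deep learning method. It uses a per-pixel Gaussian model instead: it learns each pixel's mean and standard deviation from defect-free images, scores a new image by its largest per-pixel z-score, and segments the pixels above a threshold. The threshold of 6 and the synthetic images are assumptions for illustration. The sketch also reproduces the caveat above: a uniform brightness change, like sunlight, is flagged just as a defect is.

```python
import numpy as np


def fit_good_model(good_images: np.ndarray, eps: float = 1e-3):
    """Learn per-pixel mean and spread from defect-free images of shape (n, h, w)."""
    mean = good_images.mean(axis=0)
    std = good_images.std(axis=0) + eps  # eps avoids division by zero on constant pixels
    return mean, std


def inspect(image: np.ndarray, model, threshold: float = 6.0):
    """Return (anomaly score, boolean mask of anomalous pixels)."""
    mean, std = model
    z = np.abs(image - mean) / std  # per-pixel deviation from the 'good' appearance
    return float(z.max()), z > threshold


rng = np.random.default_rng(0)
good = 100 + rng.normal(0, 2, size=(100, 32, 32))  # about 100 'good' training images
model = fit_good_model(good)

clean = 100 + rng.normal(0, 2, size=(32, 32))
defect = clean.copy()
defect[10:14, 10:14] = 200  # simulated smudge on the code
sunlit = clean + 30         # uniform brightness change across the scene

for name, img in [("clean", clean), ("defect", defect), ("sunlit", sunlit)]:
    score, mask = inspect(img, model)
    print(f"{name}: score={score:.1f}, anomalous pixels={int(mask.sum())}")
```

The boolean mask plays the role of the segmented region that is passed on for further inspection.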

A black box of uncertainty?

It could be a while before a full uptake of deep learning-based OCR is seen in industry, however.

‘As it stands, the majority of smart cameras used in industry do not have high enough performance to facilitate deep learning-based OCR at the speeds required,’ said Hünerfauth. ‘However, more and more camera providers are now considering special chips for AI inference, so this is something we have in mind – supporting dedicated hardware for AI.’

MVTec supports GPUs from Nvidia and is working on supporting further hardware. ‘All providers of smart cameras have deep learning on their agenda, and in a few years I’m sure that they will support it in their product ranges – it’s just a matter of time,’ Hünerfauth added.

It’s not just hardware that could delay the widespread adoption of deep learning-based OCR in industry, however.

‘The pharmaceutical industry is very sensitive regarding security and transparency of the inspection technology used,’ said Hünerfauth. ‘It’s a matter of fact that deep learning is still a black box of uncertainty waiting to be opened, and I think this is a reason why a lot of companies, not only in pharmaceuticals but also in other fields, are still a little bit hesitant in trusting this new technology and bringing it to production. It is therefore necessary to invest in opening this black box and providing clarity and understanding into the reasons why deep learning reaches the conclusions that it does.’

This acceptance will take time, Hünerfauth continued. He added that many customers are currently running deep learning in parallel with their traditional approaches and comparing the results, which he says will build further trust in the technology. To help open the black box, MVTec has also developed tools that it believes will make deep learning more understandable. One of these is a heat map that highlights the region of an image the deep learning model relied on when it detected an anomaly. Technicians can then inspect that region to increase their understanding of the technology.
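
Running a new method alongside an existing one comes down to comparing the two outputs image by image. This minimal sketch assumes each method's results are stored as a dict from a hypothetical image ID to the recognised string. Disagreements are returned for manual review rather than resolved automatically.

```python
def compare_runs(legacy: dict[str, str], deep: dict[str, str]):
    """Compare OCR strings from two methods run in parallel on the same images.

    Returns the agreement rate over images processed by both methods and the
    sorted list of image IDs where the results differ.
    """
    common = sorted(legacy.keys() & deep.keys())
    disagreements = [img for img in common if legacy[img] != deep[img]]
    rate = 1.0 - len(disagreements) / len(common) if common else float("nan")
    return rate, disagreements


legacy = {"img1": "LOT123", "img2": "EXP0121", "img3": "LOT9"}
deep = {"img1": "LOT123", "img2": "EXP0121", "img3": "LOT8", "img4": "LOT5"}
print(compare_runs(legacy, deep))
```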

While deep learning is set to make traceability applications easier to set up, there are still some seemingly trivial points that, if overlooked, will degrade the results, according to Hünerfauth: ‘All it takes is a few images to be labelled incorrectly and the detection result will be worsened. A lot of people are still not aware that data is the new aspect to concentrate on. You have to treat your data well. Labelling is a very important task, and from our experience if done incorrectly is the main reason why deep learning applications fail.’
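
One inexpensive check for labelling errors of this kind is to look for identical images that were given different labels. The sketch below hashes image bytes with SHA-256 and reports any content that appears under more than one label. It only catches exact duplicates; finding near-duplicate images with conflicting labels would require a similarity measure, which is beyond this sketch.

```python
import hashlib
from collections import defaultdict


def conflicting_labels(samples):
    """Find byte-identical images that were given different labels.

    samples: iterable of (sample_id, image_bytes, label)
    Returns {content hash: {label: [sample_ids]}} for every conflict.
    """
    by_hash = defaultdict(lambda: defaultdict(list))
    for sample_id, data, label in samples:
        by_hash[hashlib.sha256(data).hexdigest()][label].append(sample_id)
    return {h: dict(labels) for h, labels in by_hash.items() if len(labels) > 1}


samples = [("a", b"\x00\x01", "good"), ("b", b"\x00\x01", "defect"), ("c", b"\x02", "good")]
for digest, labels in conflicting_labels(samples).items():
    print(digest[:12], labels)
```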

While MVTec is currently choosing to concentrate on deep learning-based OCR for industry, it does have barcode and 2D code recognition in mind for the future, so end-users can expect traceability to receive the full deep learning treatment in due course.

Covid-19 puts pressure on pharma production

Covid-19 has put a lot of pressure on pharmaceutical production facilities in terms of both demand and flexibility. The industry has experienced a spike in demand for products such as test kits, masks and sanitiser. Once vaccine production begins, the healthcare sector will need to gear up to meet another round of unprecedented demand.

According to robot vision firm Pickit, this is triggering pharmaceutical leaders to think about what more should be automated in their production for faster, safer results. Lines must become more agile, with the capability to respond to changing demands quickly.

‘This represents a great opportunity for the implementation of 3D imaging, which plays a big part in facilitating automation,’ said Tobias Claus, Pickit’s account manager for Germany. ‘Automation enables less human intervention on a production line, which makes it easier to maintain appropriate distancing between personnel. Having less personnel on the production line also reduces the risk of contamination, which is especially significant in the pharmaceutical industry.’

Pickit worked with robotics firm Essert to develop a picking solution for transparent pharmaceutical syringes

He explained that many companies that had been considering automation have since started to take action, and are now researching automation technologies such as 3D imaging.

The pharmaceutical industry poses a number of challenges for 3D imaging, including the need for very fast cycle times because of the large volume of parts being produced. The variability of parts is also very high, according to Claus: ‘For example there are over one thousand different types of syringe alone.’ Pharmaceutical parts are also often transparent, delicate or small, each of which creates its own challenges for 3D vision.

In particular, transparency has caused some major headaches for 3D vision experts, to the point where Claus believes it is still not possible to solve many applications in the pharmaceutical industry with 3D vision when transparent parts are involved.

Pickit recently worked with robotics firm Essert for a client that receives transparent syringe bodies delivered as bulk goods in containers or boxes. Employees then had to move the syringes into trays manually. ‘Material separation for further processing works relatively easily with tablet blisters or rectangular pharmaceutical packaging,’ said Claus. ‘However, when working with Essert and on first seeing the transparent syringes, we immediately thought that we wouldn’t be able to solve this challenge.’

The syringes weren’t entirely transparent, however, as they were fitted with dark stoppers and caps. This was enough for Pickit to identify them using a high-resolution camera from Zivid in combination with its own smart matching algorithms. Essert integrated these with a two-arm robot to produce a fast and reliable automated picking system.

3D vision could be used to identify the transparent syringes thanks to the dark stoppers and caps. Credit: Pickit

‘We are now able to recognise small, transparent syringes with fast cycle times – around four seconds to pick and place each syringe,’ said Claus. ‘This is ideal, as, if you are looking to automate an application, you must have a cycle time below 10 seconds. The pharmaceutical industry demands even faster times than this, however, due to the sheer volume of products involved.’
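
To put these cycle times in perspective, assume one syringe per pick-and-place cycle and no pauses; both are simplifying assumptions. The four-second cycle and the ten-second threshold then translate into hourly throughput as follows:

```latex
\frac{3600\ \text{s/h}}{4\ \text{s/pick}} = 900\ \text{picks/h},
\qquad
\frac{3600\ \text{s/h}}{10\ \text{s/pick}} = 360\ \text{picks/h}
```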

To reduce these cycle times and improve picking accuracy even further, Claus said that more advanced 3D cameras, which Pickit expects to arrive soon, faster image processing algorithms and further optimisation of robot arm and gripper movements will all be required.

‘All in all, we are only at the beginning of coordinating 3D vision hardware and software with the needs of the pharmaceutical industry,’ he concluded. ‘Of course, speed and reliability can be further improved. Pickit is currently developing new innovative vision engines based on both 3D and 2D information that will improve the performance of applications manipulating transparent bottles and syringes.’