Embedded computing power is increasing and more devices are appearing on the market. In recent years, machine vision camera makers have been getting involved, either by developing their own embedded camera or sensor modules that fit a particular board, or by working with computer hardware providers.

Jan-Erik Schmitt, vice president of sales at Vision Components, believes it has never been as easy to integrate embedded vision into any device as it is currently. ‘Developers just need to choose the perfect level of integration for their projects,’ he said.

Hardware is one consideration. Typically, selecting hardware components depends on: required performance (CPU, GPU or NPU); choice of operating system; real-time or latency requirements; power consumption; resolution and image capture rate; interfaces necessary; availability of hardware and vendor support; ease of use and ease of development – especially when deciding between an FPGA- or CPU-based approach; and how much the developer knows about their choice of hardware.

Nathan Dinning, director of product management at Framos, sees the core technology – the processor – as the starting point, together with a good understanding of the required vision capabilities: 2D or 3D, whether megapixel resolution is needed, what the field of view requirement is, and how high the dynamic range must be. Finally, the specific nature of the application must be considered – for example, will industrial components for a specific temperature range or environment be needed?

However, from the perspective of Neil Chen, senior manager, video solution master division within the Cloud-IoT group of Advantech, the software definition is always the primary priority. ‘The service and data flow between edge and cloud will drive different hardware architectures,’ he said. Chen then noted performance, price-per-watt and optics integration (including sensor, illumination and lens) as key factors.

He said that, in the past, embedded systems were mainly seen as applicable to stand-alone systems. But with the wider application of AI within embedded vision, broader deployment opportunities beyond stand-alone systems are available.

This requires a different software architecture, one that will change the core idea of embedded vision. Typically, the user or programmer would think only about the run time of the embedded vision system, but now they must think about the programming, development and deployment of embedded vision as well.

Design criteria

‘Traditionally, vision sensor firms have developed their cameras for specific applications from scratch,’ said Schmitt. ‘This is very time- and cost-intensive, with high design risks.’ Companies now need to decide if this is worth the effort, or if they can benefit from shorter development cycles and lower costs by using ready-to-deploy solutions, such as the VC PicoSmart. From the point of view of Vision Components, the benefits from proven hardware and perfect integration of image acquisition and processing are undeniable.

Vision Components has also recently released an FPGA-based hardware accelerator, VC Power system on module (SoM). This 28 x 24mm SoM can be deployed between Mipi camera sensors and the embedded processor of the final application. The FPGA module can tackle pre-processing or analysis of the image data, leaving the higher-level CPU free to focus on the application.

For Florian Schmelzer, product marketing manager for embedded vision solutions at Basler, it is all about the use case. ‘When designing embedded vision systems for our customers it is absolutely mandatory to understand the use-case of the application. What problem is the customer trying to solve?’ he said. ‘As there are always many different ways to realise a system – such as the type of camera sensor, interface, processor unit, software and so on – we need to be clear what the goal of the system is. Only then is it possible to develop the leanest solution with the best price-to-performance ratio.’

Chen has software support – including the operating systems, third-party software packages and programming language – at the top of his list, followed by the data interface between camera and processor, power consumption and the application-driven image signal processor (ISP).

‘Our strategy for embedded vision systems is to link up with the AI opportunity,’ he concluded. Taking advantage of AI means creating more partnerships with ISP providers and adapting software design and features.

A changing market

But how are these choices and changing criteria impacting the market? Chen sees standardisation and modularisation as the most significant shifts in machine vision currently. ‘In the past, it took multiple different engineering resources to design and build the embedded vision system, and this resulted in a high entry barrier both for customers and makers,’ he said.

With more advanced computer vision technology and embedded vision and AI alliances, more camera vendors are releasing standard embedded vision cameras. The AI system on chip (SoC) or system on module (SoM) companies offer not only scalable AI engine SoMs in the same form factor, but also the software development kit and board support package, so that learning curves are reduced and the time-to-market is accelerated.

Chen believes this has led to a more user-friendly market. ‘In the past, embedded meant difficult, more proprietary technology. It took a lot of resources and time to get to market, but the products enjoyed a long lifecycle. Now with standardised, modular systems, time-to-market is better and lifecycles are shorter,’ he stated. This reduces prices, making the market more accessible – both for the programmer and user.

In terms of vision sensor design, Schmitt said that the new technologies and components being developed enable faster time-to-market at lower costs for individually designed sensors. He cited the VC PicoSmart as one of the smallest complete embedded vision systems on a single board. It integrates image sensor, CPU and memory; developers just need to add optics, lighting, interface board and the human-machine interface to build a vision sensor for their application.

‘More generic PC-based approaches are being replaced by a more specialised embedded approach, which can drive down system costs significantly,’ said Schmelzer. He said embedded vision offers both cheaper solutions for existing applications and new vision solutions, such as those in medical diagnostics, robotics and logistics, traffic management systems, and products for smart home applications.

Supply chain issues

Of course, one issue that is making an impact is the global shortage of semiconductors, something that is not expected to resolve itself in the near term. Schmitt said that Vision Components has largely been able to absorb the effects of the supply shortage for customers, thanks to long-term purchasing and forward planning. But the firm sees increasing requests for specific sensors, especially in combination with Mipi CSI-2 interface capability. ‘In particular, with the special design of our VC Mipi camera modules and an integrated adapter we can supply Mipi modules with almost any sensor, even if they do not natively support this interface,’ he said.

A key aspect is designing products that take into account component supply chains, said Dinning. Companies are getting involved in long-term supply contracts; some are taking on more risk or holding more inventory.

‘The global shortage and Covid lockdowns impacted the whole supply chain,’ said Chen. ‘We spent lots of time, resources and expense to sustain our existing models, but meanwhile, we needed to strengthen our design and production capability to be more agile.’

One of the results of this agile approach is the industrial AI camera, ICam-500. ‘This model, which goes into mass production in May, follows the standard video interface, certifies multiple chip vendors, and creates and offers more features on software,’ Chen said. The redesign approach means that Advantech could push more technology from hardware back to middleware, giving more value, multiple vendor choice and more features.

Chen sees software design as a key feature for increasing integration. ‘The software stack is increasingly important,’ he said. The need for software to be able to connect with different operating systems is a major change from most existing systems, and concepts such as embedded systems as a microservice are important.

Embedded World

Basler, Framos and Vision Components will be exhibiting at the Embedded World trade fair, taking place from 21 to 23 June in Nuremberg, Germany.

Basler (2-550) will demonstrate an edge AI vision solution for automated optical inspection; it will also show an approach for anomaly detection that finds defective areas using cloud services. In a classification task, AI and imaging are used to classify bacterial samples. A case study in 3D imaging for circuit board inspection rounds off the trade show presentation: using fringe light projection and a self-developed algorithm, a 3D height image is created that is suitable, among other things, for those applications that require hardware-accelerated image preprocessing.

Basler’s product highlight is its embedded vision processing board. It includes various interfaces and is based on a system on module and carrier board approach, using the i.MX 8M Plus system on chip from NXP. It can be used not only for prototyping, but also in volume production, thanks to its industrial grade design.

Framos (2-555) will demonstrate its ability to integrate Sony’s sensor technology across a multi-platform ecosystem, including Nvidia Jetson, Xilinx Kria, Renesas RZ/V2M and Texas Instruments Jacinto. In addition, there will be an Nvidia Jetson zone, highlighting time-of-flight 3D depth maps, a development kit for event-based vision sensors, as well as the GMSL3 interface, all running on Nvidia’s Jetson platform.

Visitors can see imaging components from Sony, including the latest sensors with SLVS-EC solutions, Spresense microcontroller boards with LTE support, and MOLED image display products. Framos will also show its embedded vision standard kits, which include EMVA1288-certified sensor modules, lenses, drivers and adapters. In addition, Framos will highlight its camera system production capabilities.

AMD-Xilinx (3A-239) will be showing new accelerated apps for Kria SOM, including a new 10GigE vision camera, AI acceleration of driver monitoring systems, and Yolo v5 real-time AI inference processing with multiple cameras. The demonstration with the 10GigE camera uses a 122fps Sony IMX547 global shutter sensor; a Framos SLVS-EC v2.0 IP, which ingests 2 x 5Gb/s; and Sensor-to-Image 10GigE Vision MAC to SFP+. The image data is processed in the FPGA.
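The figures quoted for the 10GigE demonstration can be sanity-checked with a little arithmetic. The sketch below estimates the uncompressed pixel data rate of the sensor and compares it with the nominal capacity of the two links mentioned. The 2,472 x 2,064 pixel resolution and the bit depths are assumptions not given in the article, the function names are purely illustrative, and blanking, line encoding and protocol overhead are ignored, so the results are indicative only.

```python
def raw_data_rate_gbps(width, height, fps, bits_per_pixel):
    """Uncompressed pixel data rate in Gb/s (1 Gb = 1e9 bits).

    Ignores blanking, line encoding and protocol overhead.
    """
    return width * height * fps * bits_per_pixel / 1e9


def fits_link(rate_gbps, link_gbps):
    """True if the raw rate is within the nominal link capacity."""
    return rate_gbps <= link_gbps


# Assumption: 2472 x 2064 active pixels (about 5.1 megapixels) for the IMX547.
WIDTH, HEIGHT = 2472, 2064
FPS = 122                 # frame rate quoted in the article
SLVS_EC_GBPS = 2 * 5.0    # 2 x 5Gb/s, as quoted in the article
TEN_GIGE_GBPS = 10.0      # nominal 10GigE line rate

if __name__ == "__main__":
    # The slower of the two links limits the whole chain.
    bottleneck = min(SLVS_EC_GBPS, TEN_GIGE_GBPS)
    for bits in (8, 10, 12):  # assumed output bit depths
        rate = raw_data_rate_gbps(WIDTH, HEIGHT, FPS, bits)
        print(f"{bits}-bit: {rate:.2f} Gb/s, fits: {fits_link(rate, bottleneck)}")
```

Under these assumptions the raw rate is roughly 5.0Gb/s at 8-bit and 7.5Gb/s at 12-bit, which sits inside the nominal 10Gb/s of either link; the real headroom will be smaller once overhead is counted.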

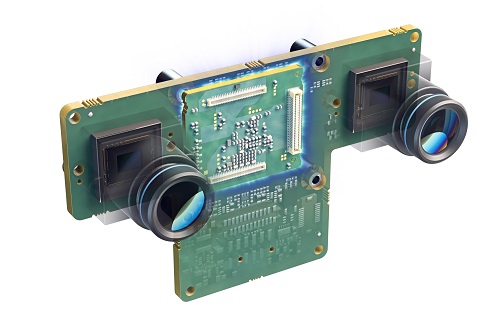

Vision Components (2-450) will present Mipi modules and OEM components. The firm will launch the VC Stereo Cam for 3D and two-camera applications. This stereo camera is based on the FPGA hardware accelerator, VC Power SoM, which can process large data volumes in real time. It captures images via two Mipi camera modules and executes image pre-processing routines including, for example, 3D point cloud generation.
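To illustrate what 3D point cloud generation from two cameras involves, the sketch below applies the textbook pinhole model for a rectified stereo pair, where depth is focal length times baseline divided by disparity. It is not Vision Components' FPGA implementation, which the article does not describe; the function names and all parameter values are hypothetical, and the disparity map is assumed to have been computed already.

```python
def disparity_to_point(u, v, disparity_px, focal_px, baseline_m, cx, cy):
    """Back-project one pixel of a rectified stereo pair to (x, y, z) in metres.

    Returns None where the disparity is not positive (no valid match).
    """
    if disparity_px <= 0:
        return None
    z = focal_px * baseline_m / disparity_px  # depth by triangulation
    x = (u - cx) * z / focal_px               # horizontal offset from the optical axis
    y = (v - cy) * z / focal_px               # vertical offset from the optical axis
    return (x, y, z)


def point_cloud(disparity_map, focal_px, baseline_m, cx, cy):
    """Convert a 2D disparity map (rows of pixel disparities) to a list of 3D points."""
    points = []
    for v, row in enumerate(disparity_map):
        for u, d in enumerate(row):
            p = disparity_to_point(u, v, d, focal_px, baseline_m, cx, cy)
            if p is not None:
                points.append(p)
    return points
```

With a hypothetical focal length of 1,000 pixels and a 0.1m baseline, for example, a disparity of 20 pixels corresponds to a depth of 5m. The per-pixel independence of this calculation is one reason it lends itself to hardware acceleration.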

Vision Components VC Stereo Cam

New Mipi camera modules will also premiere at the trade fair, integrating various global shutter sensors from the Sony Pregius S series with minimal noise and high light sensitivity: IMX565, IMX566, IMX567 and IMX568. The modules with a Mipi interface, trigger input and flash trigger output are designed for easy connection to common, single-board computers.