SLAMcore, a UK developer of Simultaneous Localisation and Mapping (SLAM) algorithms for robots and drones, has raised $5m to help bring its technology to market.

The round was led by global technology investor Amadeus Capital Partners. Existing investors Toyota AI Ventures and the Mirai Creation Fund also took part, alongside new investors MMC Ventures and Octopus Ventures.

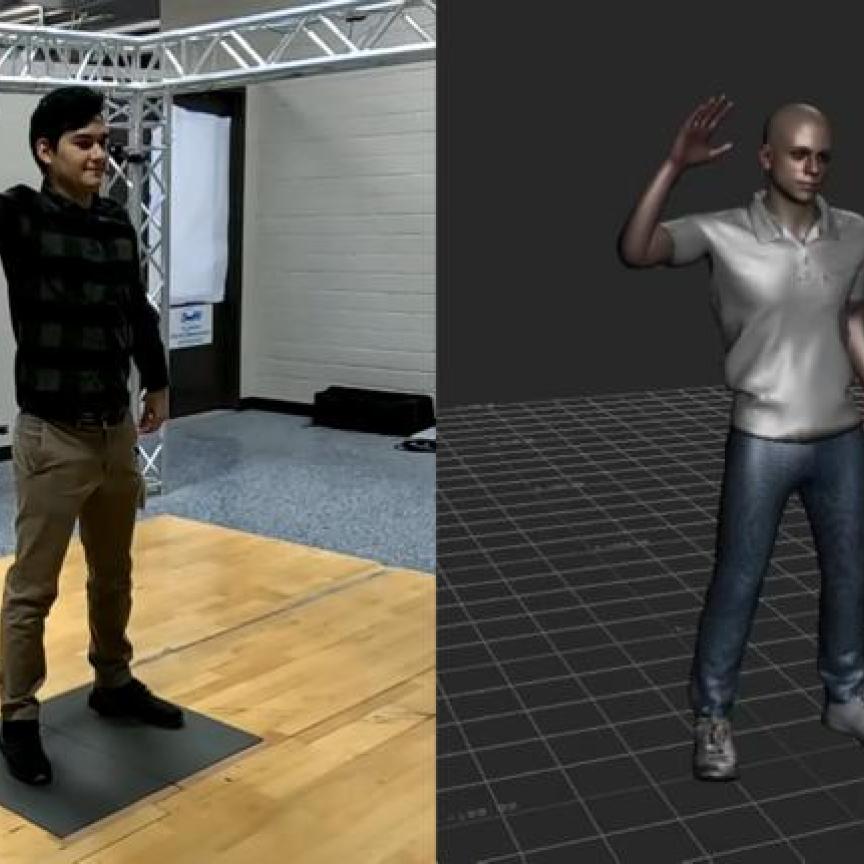

Robots and drones use SLAM algorithms to acquire spatial intelligence, which lets them calculate their position accurately, understand unfamiliar surroundings and navigate reliably.

‘BIS Research estimates the global SLAM technology market to be worth over $8 billion by 2027,’ commented Amelia Armour, principal of Amadeus Capital Partners. ‘This funding round will enable SLAMcore to take its spatial AI solution to that growing market and we expect demand for its affordable and flexible system to be high. Having backed SLAMcore at the start, we’re excited to be investing again at this critical stage for the company.’

SLAMcore spun out of the Department of Computing at Imperial College London in early 2016 and closed its first funding round in March 2017. Headquartered in London, the company has grown to a team of 15 with expertise in designing and deploying spatial algorithms for robots.

‘The robotics revolution may seem just around the corner, but there is still a big gap between the videos we see on the internet and real-world robots,’ said SLAMcore CEO Owen Nicholson. ‘SLAMcore is helping robot and drone creators to bridge the gap between demos and commercially viable systems.’

‘It is a really exciting time for robotics,’ added SLAMcore co-founder Dr Stefan Leutenegger. ‘We are seeing a convergence of geometric computer vision algorithms, availability of high-performance computational hardware, and deep learning. We are embracing this new world and will move quickly towards offering solutions for robots requiring an advanced level of understanding of their environment.’

‘Our initial product will calculate an accurate and reliable position, without the need for GPS or any other external infrastructure, but that is just the start,’ Nicholson continued. ‘With this funding, we will also develop detailed mapping solutions capable of creating geometrically accurate reconstructions of a robot’s surroundings in real time, and understanding the objects within, utilising the latest developments in machine learning.’
